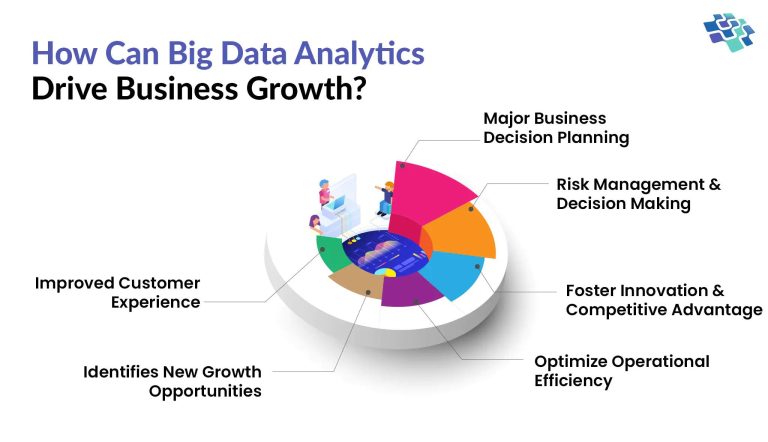

From boardrooms to factory floors, a new kind of executive adviser is gaining power: data. As companies navigate volatile markets, supply-chain shocks and shifting consumer behavior, decisions once steered by experience and intuition are increasingly driven by vast streams of information analyzed in real time.

The convergence of cloud computing, artificial intelligence and connected devices is turning raw data into operational guidance: pinpointing demand, setting prices, automating credit decisions and predicting equipment failures before they halt production. The trend is no longer confined to tech giants; falling costs and off-the-shelf analytics are bringing sophisticated tools to midmarket firms and startups, reshaping how capital is allocated and risks are managed across sectors from retail to healthcare to finance.

The pivot, however, comes with new pressures. Regulators are tightening oversight of data privacy and algorithmic accountability, while executives confront stubborn challenges: data quality, talent shortages and the need to explain model-driven choices to customers, employees and boards. As the race to harness big data accelerates, the winners will be those that translate insight into action without losing trust.

Table of Contents

- Big Data Powers Dynamic Pricing and Supply Chain Resilience With Predictive Models

- Real-Time Decisions Require Robust Data Governance, Data Quality and Explainable AI

- Adopt Cloud Lakehouse Architecture, Data Contracts and Analytics Upskilling to Capture ROI

- In Conclusion

Big Data Powers Dynamic Pricing and Supply Chain Resilience With Predictive Models

As volatility reshapes commerce, enterprises are wiring real-time data into pricing engines and planning systems so they can respond within minutes, not months. Streaming feeds from point-of-sale (POS) systems, e-commerce clickstreams, IoT trackers, and freight networks power models that forecast demand at granular levels, estimate price elasticity on the fly, and flag logistics disruptions before they cascade. Retailers and manufacturers are pairing reinforcement learning with constrained optimization to set market-responsive prices within margin and fairness guardrails, while recalibrating safety stock, reorder points, and production schedules to blunt shocks. Early deployments report higher price realization, fewer stockouts, faster inventory turns, and better on-time, in-full (OTIF) delivery as data pipelines, feature stores, and API hooks into ERP/OMS/WMS systems bring decisions closer to the edge. Analysts note that durable advantage now depends on disciplined MLOps, scenario stress-testing with digital twins, and governance that curbs bullwhip effects and opaque price discrimination.
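Estimating elasticity and setting a price under guardrails can be illustrated with a deliberately simple stand-in for the reinforcement-learning and constrained-optimization setups described above. This Python sketch assumes a constant-elasticity demand curve, fits it by ordinary least squares on log price and log quantity, then grid-searches the profit-maximizing price between a floor and a ceiling. The function names, parameters and guardrail values are hypothetical, not any vendor's API.

```python
import math

def estimate_elasticity(prices, quantities):
    """Fit log(q) = a + b*log(p) by ordinary least squares; b is the price elasticity."""
    xs = [math.log(p) for p in prices]
    ys = [math.log(q) for q in quantities]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def best_price(a, b, unit_cost, floor, ceiling, steps=100):
    """Grid-search the profit-maximizing price inside hypothetical [floor, ceiling] guardrails."""
    best_p, best_profit = floor, float("-inf")
    for i in range(steps + 1):
        p = floor + (ceiling - floor) * i / steps
        demand = math.exp(a) * p ** b          # constant-elasticity demand curve (assumption)
        profit = (p - unit_cost) * demand
        if profit > best_profit:
            best_p, best_profit = p, profit
    return best_p, best_profit
```

With elasticity ε < -1 and no binding guardrail, the search should land near the textbook markup rule p* = c·ε/(1+ε); for ε = -2 and a unit cost of 5, that is a price of 10. If the ceiling sits below that point, the guardrail binds and the ceiling itself is chosen.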

- Signals: competitor prices, promotions, weather/events, port congestion, supplier lead times, social sentiment.

- Pricing playbook: elasticity curves, demand shaping, A/B tests and multi-armed bandits, floors/ceilings with fairness guardrails.

- Resilience levers: dynamic safety stock, alternate sourcing, capacity rebalancing, route re-optimization, vendor-risk anomaly detection.

- Architecture: streaming ingestion, feature stores, explainable models, human-in-the-loop approvals, audit trails.

- KPIs to monitor: MAPE, price realization, contribution margin per constraint, fill rate, OTIF, inventory turns, working capital.
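Two items from these lists have standard textbook forms. The sketch below assumes independent, normally distributed per-period demand and a fixed lead time L, so safety stock is z·σ·√L and the reorder point adds expected lead-time demand μ·L. Recomputing both over a rolling window of recent demand is one simple reading of "dynamic safety stock." It also computes MAPE (mean absolute percentage error) and OTIF from order records whose field names are hypothetical.

```python
from statistics import NormalDist, mean, stdev

def reorder_point(recent_demand, lead_time, service_level):
    """Return (reorder_point, safety_stock) from a rolling window of per-period demand.

    Assumes i.i.d. normal demand and a fixed lead time (in periods): a textbook
    simplification, not a production planning model.
    """
    z = NormalDist().inv_cdf(service_level)   # e.g. 0.95 -> about 1.645
    mu, sigma = mean(recent_demand), stdev(recent_demand)
    safety_stock = z * sigma * lead_time ** 0.5
    return mu * lead_time + safety_stock, safety_stock

def mape(actuals, forecasts):
    """Mean absolute percentage error in percent; undefined when an actual is zero."""
    return 100 * mean(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts))

def otif(orders):
    """Share of orders delivered on time and in full.

    Each order is a dict with hypothetical keys: on_time, shipped, ordered.
    """
    hits = sum(1 for o in orders if o["on_time"] and o["shipped"] >= o["ordered"])
    return hits / len(orders)
```

Lead times also vary in practice, and the safety-stock formula then needs a lead-time variance term; this version covers demand variability only.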

Real-Time Decisions Require Robust Data Governance, Data Quality and Explainable AI

Enterprises accelerating from batch analytics to split-second actions are formalizing guardrails that make automation defensible: machine-readable policies, verifiable data health, and transparent model reasoning embedded directly in production workflows. Industry operators are wiring event streams to governance catalogs, stamping each record with lineage and quality scores, and binding decision thresholds to these signals so interventions pause or reroute when confidence drops. Early adopters in finance, retail, and logistics report measurable gains (fewer false positives, faster dispute resolution, and shorter incident windows) when frontline teams can view the “why” behind a model’s choice alongside audit-ready evidence.
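Binding decision thresholds to data-quality and confidence signals can be sketched as a small routing function. In this hypothetical example, each record carries its lineage and a quality score, and the model supplies a probability-like score. The decision is automated only when both clear assumed thresholds; otherwise it escalates to a human with reason codes attached. The field names, thresholds and reason-code strings are illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class Record:
    payload: dict
    lineage: list          # hypothetical: upstream sources that produced this record
    quality_score: float   # hypothetical 0..1 score from freshness/completeness checks

@dataclass
class Decision:
    action: str            # "auto_approve", "auto_decline" or "human_review"
    reason_codes: list = field(default_factory=list)

def route(record, model_score, min_quality=0.9, approve_at=0.8, decline_at=0.2):
    """Act automatically only when both data health and model confidence clear thresholds."""
    reasons = []
    if record.quality_score < min_quality:
        reasons.append(f"DATA_QUALITY_LOW:{record.quality_score:.2f}")
    if decline_at < model_score < approve_at:
        reasons.append(f"MODEL_UNCERTAIN:{model_score:.2f}")
    if reasons:
        # Escalate with reason codes plus lineage so reviewers see the evidence trail.
        return Decision("human_review", reasons + [f"LINEAGE:{'>'.join(record.lineage)}"])
    action = "auto_approve" if model_score >= approve_at else "auto_decline"
    return Decision(action, [f"SCORE:{model_score:.2f}"])
```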

- Policy-as-code governance: granular access controls, PII tagging, retention rules, and automated enforcement across pipelines.

- Real-time data quality: schema contracts, freshness and completeness SLAs, anomaly detection, and upstream incident alerts.

- Explainability at the edge: feature attributions, decision reason codes, and user-facing narratives integrated into operational tools.

- Continuous risk checks: drift and bias monitoring, shadow deployments, kill switches, and human-in-the-loop escalation paths.

- Accountability and auditability: clear ownership, change logs, immutable lineage, and evidence trails for regulators and customers.
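Drift monitoring and kill switches can be sketched with the Population Stability Index (PSI), a common metric for comparing a feature's live distribution against its training baseline over shared bins: the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are baseline and live proportions. The 0.25 trip threshold is a widely used rule of thumb rather than a standard. The `DriftGuard` class is a hypothetical illustration, not part of any monitoring product.

```python
import math

def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between a baseline and a live histogram (same bins)."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)   # floor avoids log(0) on empty bins
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score

class DriftGuard:
    """Hypothetical kill switch: trips when PSI exceeds a threshold; reset is manual."""

    def __init__(self, threshold=0.25):
        self.threshold = threshold
        self.tripped = False

    def check(self, baseline, live):
        score = psi(baseline, live)
        if score > self.threshold:
            self.tripped = True   # caller should fall back to rules or human review
        return score

    def allow_automation(self):
        return not self.tripped
```

Requiring a person to reset the guard, rather than clearing it automatically once drift subsides, keeps a human in the escalation path.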

Adopt Cloud Lakehouse Architecture, Data Contracts and Analytics Upskilling to Capture ROI

In a bid to turn sprawling data estates into measurable business outcomes, leading enterprises are consolidating analytics on a cloud lakehouse foundation, formalizing data contracts to enforce reliability at the source, and prioritizing analytics upskilling to close the last-mile gap between insight and action. The combined effect is faster time-to-value, stronger governance, and clearer attribution of ROI, enabled by decoupled storage and compute, open table formats, and product-aligned ownership that treats data as a first-class asset.

- Operationalize the lakehouse: Standardize on open tables, optimize query routing, and separate hot/cold tiers to cut cost-per-insight without sacrificing performance.

- Codify data contracts: Define schemas, SLOs, and lineage at the producer interface; automate validation, versioning, and backward compatibility checks in CI/CD.

- Govern with FinOps: Apply budget guardrails, auto-suspend idle workloads, and publish unit economics (cost per dashboard, per model, per domain).

- Upskill the workforce: Launch role-based learning paths for analysts, engineers, and product teams, covering SQL, Python, dbt, notebooks, and BI storytelling.

- Measure what matters: Track reduction in failed pipelines, time-to-insight, data consumer NPS, and reuse rates of certified datasets and features.
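A data-contract check of the kind that could run in CI/CD is sketched below. The contract format (a column-to-type map plus a freshness SLO), the type names, and the compatibility rule (a new version may add columns but must not drop or retype existing ones) are assumptions chosen for illustration; production contracts usually carry richer schema definitions.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract format: column -> type name, plus a freshness SLO.
CONTRACT_V1 = {"version": 1, "columns": {"order_id": "str", "amount": "float"},
               "freshness": timedelta(hours=1)}

TYPES = {"str": str, "float": float, "int": int}

def validate_rows(contract, rows):
    """Return a list of violations for rows that break the producer's schema."""
    errors = []
    for i, row in enumerate(rows):
        for col, type_name in contract["columns"].items():
            if col not in row:
                errors.append(f"row {i}: missing {col}")
            elif not isinstance(row[col], TYPES[type_name]):
                errors.append(f"row {i}: {col} is not {type_name}")
    return errors

def is_fresh(contract, last_loaded_at, now=None):
    """Check the freshness SLO against a timezone-aware load timestamp."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= contract["freshness"]

def backward_compatible(old, new):
    """A new version may add columns but must not drop or retype existing ones."""
    return all(new["columns"].get(col) == t for col, t in old["columns"].items())
```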

In Conclusion

As data volumes surge and analytical tools mature, big data is moving from experimental pilots to the core of how companies plan, price, hire, and serve customers. Executives across finance, retail, healthcare, and manufacturing are tying analytics to measurable outcomes, while regulators increase scrutiny on privacy, bias, and security. The result is a faster decision cycle and, with it, a higher bar for transparency and governance.

The divide is likely to widen between firms that turn data into timely, trusted insight and those still untangling silos and quality issues. Real-time streams, edge computing, and AI models promise competitive gains, but they also raise questions about accountability and cost. For now, the consensus in boardrooms is clear: big data is no longer optional. The advantage will belong to organizations that pair scale with stewardship, and speed with explainability.