A viral video can reach millions before breakfast. Behind the scenes, automated systems now scan its frames, transcribe its audio, and weigh its context against sprawling rulebooks, all in seconds. As short‑form clips and live streams overtake text as the default language of social media, platforms are leaning on increasingly sophisticated artificial intelligence to police what users see.

The shift is both technological and political. Vision-and-language models promise to spot hate speech, graphic violence, and deepfakes at scale, while advertisers demand brand safety and regulators in Europe and the United States push for faster removals and greater transparency. Yet speed comes with trade-offs: accuracy varies across languages and dialects; context can be lost in satire or news; and wrongful takedowns fuel debates over bias and free expression. Human moderators still sit in the loop for edge cases and appeals, but AI is setting the pace, and the queue.

With elections, wars, and synthetic media testing the limits of current tools, the stakes are rising. New standards for content provenance, watermarking, and auditability are emerging, even as encrypted channels and live broadcasts stretch enforcement to the edge. This article examines how AI is reshaping video moderation on the world’s biggest platforms: what’s working, where it falls short, and what the next wave of tools means for creators, companies, and the public square.

Table of Contents

- AI Takes the First Pass on Viral Clips: Multimodal models, on-device filters and real-time triage at scale

- The Blind Spots in Automated Moderation: Context loss, cross-language nuance, bias drift and adversarial deepfakes

- Action Plan for Platforms: Human-in-the-loop review, transparent appeals, risk-based thresholds, region-specific taxonomies, provenance watermarks and continuous red teaming

- The Way Forward

AI Takes the First Pass on Viral Clips: Multimodal models, on-device filters and real-time triage at scale

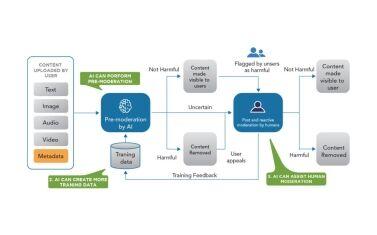

As short-form uploads surge into the millions per hour, platforms are quietly moving the first layer of screening to algorithms that fuse vision, audio and text. Lightweight on-device filters pre-empt obvious violations, while server-side models score risk in near real time. The result is a triage system that can blur or age-gate a clip before it trends, throttle distribution while a human double-checks, and surface likely harms (self-harm cues, hazing, coordinated abuse, deceptive edits) without exposing private data or adding perceptible delay to the feed.

- Multimodal parsing: frame-by-frame analysis, ASR and OCR combine with caption/hashtag parsing to catch signals that single-channel models miss.

- On-device prefilters: compact classifiers flag nudity, graphic violence and known contraband offline, reducing server load while keeping latency low and preserving privacy.

- Real-time triage: severity scores, confidence bands and velocity-of-share metrics trigger actions from soft interventions (warnings, age gates) to temporary distribution holds.

- Adversarial resilience: robustness to crops, pitch shifts and meme-style overlays, plus hash-matching and watermark detection for reuploads and deepfakes.

- Human-in-the-loop: escalations route to specialist reviewers by language and domain, with active learning feeding back hard cases to retrain models.

- Transparency levers: creator notices, dispute workflows and policy-aligned explanations reduce overreach and help calibrate false positives at scale.
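The real-time triage step described above can be sketched in a few lines. This is a minimal illustration, not any platform's actual pipeline: the `ClipSignals` fields, the `triage` function, the `viral_rate` parameter and every cutoff value are hypothetical, chosen only to show how severity, confidence and share velocity might combine into a graduated response.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    AGE_GATE = "age_gate"
    HOLD_FOR_REVIEW = "hold_for_review"


@dataclass
class ClipSignals:
    severity: float           # model-estimated harm severity, 0..1
    confidence: float         # model confidence in that estimate, 0..1
    shares_per_minute: float  # observed velocity of sharing


def triage(signals: ClipSignals, viral_rate: float = 500.0) -> Action:
    """Map model outputs and share velocity to a graduated action.

    All cutoffs below are illustrative assumptions, not published values.
    """
    # Expected risk: severity discounted by how sure the model is.
    risk = signals.severity * signals.confidence
    # Velocity factor in [0, 1]: fast-spreading clips get up to 2x weight.
    velocity = min(signals.shares_per_minute / viral_rate, 1.0)
    adjusted = min(risk * (1.0 + velocity), 1.0)

    if adjusted >= 0.8:
        return Action.HOLD_FOR_REVIEW  # temporary distribution hold
    if adjusted >= 0.5:
        return Action.AGE_GATE
    if adjusted >= 0.25:
        return Action.WARN
    return Action.ALLOW


if __name__ == "__main__":
    slow = ClipSignals(severity=0.6, confidence=0.5, shares_per_minute=0)
    fast = ClipSignals(severity=0.6, confidence=0.5, shares_per_minute=500)
    print(triage(slow).value, triage(fast).value)
```

In this sketch the same clip moves from a warning to an age gate purely because it is spreading faster, which is the point of folding velocity into the score: borderline content gets more scrutiny as its potential reach grows.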

The Blind Spots in Automated Moderation: Context loss, cross-language nuance, bias drift and adversarial deepfakes

Amid rising enforcement demands, platform auditors and safety teams acknowledge that automated video review still misses meaning when signals depend on setting, speaker intent, or rapidly evolving visual tropes. Internal testing and third‑party probes point to models that over‑remove satire while under‑flagging coordinated harassment, falter on code‑switched speech and dialects, and drift in fairness as data pipelines shift. They also face an arms race with synthetic media engineered to fool detectors.

- Context collapse: Short clips detached from creator history or surrounding conversation yield false alarms for hate and violence, while credible threats in longer streams go undetected.

- Multilingual nuance: Dialects, reclaimed slurs, and sarcasm in non‑English or mixed‑language posts push error rates higher in underserved markets.

- Bias drift: Subtle changes in training data and feedback loops recalibrate thresholds over time, disproportionately affecting minority communities and new creators.

- Adversarial deepfakes: Low‑latency face/voice swaps and text‑to‑video spoofs blend compression noise, watermark removal, and prompt obfuscation to evade current classifiers.
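One way to make bias drift and multilingual error gaps measurable is to track the false positive rate per language group over time and compare it with an earlier baseline. The sketch below assumes human-reviewed audit samples are available as `(group, flagged, violating)` tuples; the function names, the group labels and the 5-point tolerance are hypothetical, and the sample data is made up purely to exercise the code.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (language group, model flagged the clip?, reviewers found a violation?)
Record = Tuple[str, bool, bool]


def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """False positive rate per group: flagged non-violating / all non-violating."""
    false_positives: Dict[str, int] = defaultdict(int)
    negatives: Dict[str, int] = defaultdict(int)
    for group, flagged, violating in records:
        if not violating:
            negatives[group] += 1
            if flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items() if n > 0}


def drifted_groups(baseline: Dict[str, float],
                   current: Dict[str, float],
                   tolerance: float = 0.05) -> List[str]:
    """Groups whose false positive rate rose by more than `tolerance`."""
    return sorted(
        g for g, rate in current.items()
        if g in baseline and rate - baseline[g] > tolerance
    )


def sample(group: str, wrongly_flagged: int, total: int) -> List[Record]:
    """Build a synthetic audit sample of non-violating clips (illustration only)."""
    return [(group, i < wrongly_flagged, False) for i in range(total)]


if __name__ == "__main__":
    before = false_positive_rates(sample("en", 2, 100) + sample("hi-en", 5, 100))
    after = false_positive_rates(sample("en", 3, 100) + sample("hi-en", 15, 100))
    print(drifted_groups(before, after))
```

A check like this only surfaces over-removal. Under-flagging of harassment or threats would need the mirror-image metric, a per-group false negative rate, computed from the same audited samples.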

Action Plan for Platforms: Human-in-the-loop review, transparent appeals, risk-based thresholds, region-specific taxonomies, provenance watermarks and continuous red teaming

Major platforms are shifting from blunt, one-size-fits-all takedowns to auditable workflows that blend machine speed with accountable oversight, emphasizing verifiable signals, local context, and user due process to meet regulatory expectations and preserve trust at scale.

- Human-in-the-loop review: Route edge cases to trained reviewers with time-bound SLAs, dual-approval on high-risk calls, and expert panels for sensitive categories to curb false positives.

- Transparent appeals: Provide case IDs, plain-language rationales, evidence snapshots, and outcome notifications, with reinstatement metrics and appeal turnaround times reported publicly.

- Risk-based thresholds: Calibrate model confidence by harm severity, virality potential, and user vulnerability; use shadow evaluations and holdouts before global rollouts to minimize over-enforcement.

- Region-specific taxonomies: Maintain localized policy ontologies reflecting legal and cultural norms, backed by multilingual labeling, community advisory inputs, and jurisdiction-aware enforcement.

- Provenance watermarks: Adopt C2PA-style content credentials, device-side signing, and resilient visible/invisible marks; flag unverifiable media and reduce distribution while preserving user context.

- Continuous red teaming: Run adversarial probes, synthetic abuse simulations, and coordinated brigading drills; fund bounty programs and share indicators through industry consortia for rapid collective defense.
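To make the risk-based thresholds and dual-approval ideas in the list above concrete, here is a minimal sketch of how the confidence a model needs before enforcement could fall as potential harm rises. The category names, severity weights, and the `base` and `floor` values are all hypothetical assumptions for illustration; a real deployment would derive them from policy and from the shadow evaluations the list mentions.

```python
from dataclasses import dataclass

# Hypothetical severity weights per policy category (0 = mild, 1 = most severe).
SEVERITY = {"spam": 0.2, "graphic_violence": 0.6, "child_safety": 1.0}


@dataclass
class Decision:
    enforce: bool              # act on the model's call?
    needs_dual_approval: bool  # require a second human sign-off?
    threshold: float           # confidence that was required


def enforcement_threshold(category: str, vulnerable_audience: bool,
                          base: float = 0.95, floor: float = 0.5) -> float:
    """Required confidence drops as severity and audience vulnerability rise."""
    threshold = base - 0.4 * SEVERITY[category]
    if vulnerable_audience:
        threshold -= 0.05
    return max(threshold, floor)


def decide(category: str, model_confidence: float,
           vulnerable_audience: bool = False) -> Decision:
    threshold = enforcement_threshold(category, vulnerable_audience)
    enforce = model_confidence >= threshold
    # High-severity enforcement calls also go to a second reviewer.
    dual = enforce and SEVERITY[category] >= 0.6
    return Decision(enforce, dual, threshold)


if __name__ == "__main__":
    print(decide("spam", 0.80))
    print(decide("child_safety", 0.60))
```

The design choice here is asymmetric caution: for low-harm categories the system tolerates missed violations to avoid over-enforcement, while for severe categories it acts on weaker evidence but adds a second human check to contain the resulting false positives.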

The Way Forward

As AI shifts from support tool to front-line gatekeeper, it is redefining how fast and how far platforms can act on video content. The technology delivers unprecedented scale and speed, but it also sharpens long-running trade-offs over accuracy, context, and due process, especially around elections, conflicts, child safety, and synthetic media.

Regulators are moving to formalize those expectations with audit, transparency, and appeal requirements, while platforms invest in multimodal models, provenance tags, and cross-industry hash sharing. Adversaries are adapting in parallel, ensuring human oversight remains central for edge cases and cultural nuance. Increasingly, the question is less whether AI can moderate and more how it will be governed, measured, and held to account. As major events loom, the balance between rapid enforcement and users’ rights is likely to define the next phase of social media video moderation.