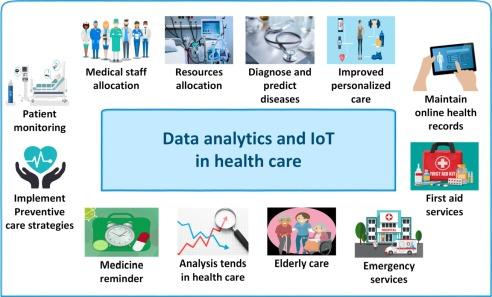

Public health agencies are increasingly turning to data analytics to track and prevent disease spread, building early-warning systems that analyze signals from hospitals, laboratories, wastewater, and mobility patterns in near real time. The shift, accelerated by the COVID-19 pandemic, is reshaping how officials detect outbreaks, forecast surges, and target interventions such as vaccinations, testing, and antiviral distribution.

Governments and researchers say the approach is already shortening response times and improving resource allocation, but it also raises concerns about data quality, privacy, and uneven digital infrastructure across regions. As investment grows, health departments are racing to integrate disparate data streams and standardize reporting, steps seen as critical to catching the next wave before it crests.

Table of Contents

- Real-Time Mobility and Contact Patterns Reveal Hidden Chains of Transmission

- Combining Electronic Health Records, Wastewater Signals, and Search Trends Speeds Early Detection

- Establish Common Data Standards and Interagency Sharing Agreements to Cut Reporting Delays

- Invest in Wastewater Surveillance, Local Analytics Talent, and Trigger-Based Dashboards for Rapid Response

- In Summary

Real-Time Mobility and Contact Patterns Reveal Hidden Chains of Transmission

Near-real-time mobility signals (anonymized location pings, transit gate data, and opt-in Bluetooth co-location metrics) are being fused with clinic visit timestamps to map probabilistic exposure paths that traditional case investigations miss. Using temporal network analysis and graph-based anomaly detection, analysts identify clusters where people intersect across venues and time windows, flagging likely transmission links days before lab confirmation. Agencies report that privacy-by-design pipelines, including aggregation thresholds and on-device processing, reduce re-identification risk while preserving epidemiological utility. Key indicators now under watch include:

- Inter-neighborhood flow shifts after major events or weather disruptions

- Co-location density spikes in workplaces, transit hubs, and night-time venues

- Dwell-time anomalies that extend exposure windows in high-risk settings

- Cross-border commuter corridors linking outbreaks across jurisdictions

- Temporal “bridges” where low-incidence areas connect to active clusters
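The cluster-finding step described above can be sketched with a union-find over co-location events. This is a minimal illustration, not any agency's pipeline: the input tuples `(person_token, venue_id, hour_bucket, home_area)`, the function names, and the rule for what counts as a "bridge" are all assumptions. It also ignores event ordering, so it finds chains of shared venues rather than strictly time-respecting paths, which a full temporal network analysis would enforce.

```python
from collections import defaultdict

# Each visit is a hypothetical tuple: (person_token, venue_id, hour_bucket, home_area).
# person_token is assumed to be an anonymized identifier, never a name.

def colocation_groups(visits):
    """Group people who were at the same venue in the same hour bucket."""
    groups = defaultdict(set)
    for person, venue, hour, _area in visits:
        groups[(venue, hour)].add(person)
    return groups

def connected_clusters(visits):
    """Union-find: people linked directly or through chains of shared venues."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for members in colocation_groups(visits).values():
        ordered = sorted(members)
        find(ordered[0])  # register people who were seen alone
        for other in ordered[1:]:
            union(ordered[0], other)

    clusters = defaultdict(set)
    for person in list(parent):
        clusters[find(person)].add(person)
    return list(clusters.values())

def temporal_bridges(visits, active_areas):
    """Clusters that join residents of active areas with residents of other areas."""
    home = {person: area for person, _venue, _hour, area in visits}
    bridges = []
    for cluster in connected_clusters(visits):
        areas = {home[p] for p in cluster}
        if areas & active_areas and areas - active_areas:
            bridges.append(sorted(areas))
    return bridges
```

If person `a` (home area "north") shares a café with `b` ("east"), and `b` later shares a gym with `c` ("south"), `temporal_bridges(visits, {"north"})` links all three areas through one cluster even though `a` and `c` never met.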

Public health units are operationalizing these findings with precision interventions that aim to curb spread while minimizing disruption. Verified signals feed into dashboards that trigger rapid actions, coordinated via data-sharing MOUs and continuously audited access logs. Officials say the approach shortens the interval between signal and response, enabling targeted measures rather than broad restrictions. Recent responses include:

- Pop-up testing and rapid screening deployed along identified transit nodes

- Wastewater sampling intensification at facilities near inferred clusters

- Targeted outreach to workplaces and venues with repeated co-location patterns

- Adaptive transit scheduling to relieve peak-density pinch points

- Resource pre-positioning for hospitals in corridors showing rising risk
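Aggregation thresholds, one of the privacy-by-design measures mentioned in this section, are commonly implemented as small-cell suppression before counts reach a dashboard. The sketch below assumes a hypothetical minimum cell size of 10 and event records with `area` and `day` fields; real thresholds follow each agency's own disclosure rules.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # hypothetical threshold; each agency sets its own

def aggregate_with_suppression(events, min_cell=MIN_CELL_SIZE):
    """Count events per (area, day) and withhold counts below min_cell.

    Withheld cells come back as None so a dashboard can display
    "suppressed" rather than an exact small number.
    """
    counts = Counter((event["area"], event["day"]) for event in events)
    return {cell: (n if n >= min_cell else None) for cell, n in counts.items()}

events = (
    [{"area": "tract-A", "day": "2024-03-01"}] * 12
    + [{"area": "tract-B", "day": "2024-03-01"}] * 3
)
print(aggregate_with_suppression(events))
# {('tract-A', '2024-03-01'): 12, ('tract-B', '2024-03-01'): None}
```

Suppression alone can be undone by subtraction when row or column totals are also published, which is why disclosure-control practice often pairs it with complementary suppression or rounding.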

Combining Electronic Health Records, Wastewater Signals, and Search Trends Speeds Early Detection

Health agencies are increasingly fusing clinical, environmental, and digital behavior data to spot outbreaks before case counts rise. By aligning time-stamped care encounters with sewer-based pathogen signals and real-time web interest, analysts are surfacing anomalies earlier and with greater geographic precision. Each stream contributes a distinct lens on transmission dynamics:

- Electronic health records: De-identified chief complaints, test orders, and admission patterns reveal shifts in symptom clusters and severity across facilities.

- Wastewater surveillance: Community-level viral load and variant markers act as a population baseline, including where diagnostic testing is limited.

- Search trend signals: Spikes in symptom and treatment queries offer rapid, location-aware context, tempered with controls for seasonality and media effects.

When cross-validated and modeled together, these sources function as an early-warning radar, often narrowing the detection window from weeks to days, while maintaining privacy through aggregation and governance. The integrated feed supports more timely, targeted responses that can dampen transmission and reduce strain on hospitals:

- Faster alerts for local surges, with thresholds tuned to historical baselines and day-of-week effects.

- Targeted outreach to high-risk neighborhoods, informed by sewer catchment areas and clinic volumes.

- Resource staging for staffing, therapeutics, and bed capacity based on forecasted admission curves.

- Actionable guidance for schools and workplaces, including ventilation, testing, and vaccination timing.
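The baseline and corroboration logic above can be sketched in two small functions. `weekday_zscore` compares a day's count with the same weekday in recent weeks, one simple way (assumed here, not prescribed by the source) to absorb day-of-week effects; `fused_alert` applies a k-of-n voting rule across streams. Stream names, per-stream thresholds, the four-week window, and the standard-deviation floor are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev

def weekday_zscore(series, day_index, weeks=4, min_sd=1.0):
    """Score series[day_index] against the same weekday in the previous `weeks` weeks.

    series is a list of daily values, oldest first. Comparing like weekdays
    absorbs day-of-week effects such as weekend clinic dips. min_sd is a
    hypothetical floor so flat baselines do not divide by zero.
    Returns None when fewer than two comparable days exist.
    """
    history = [series[day_index - 7 * k] for k in range(1, weeks + 1)
               if day_index - 7 * k >= 0]
    if len(history) < 2:
        return None
    return (series[day_index] - mean(history)) / max(stdev(history), min_sd)

@dataclass
class StreamSignal:
    name: str         # e.g. "ehr", "wastewater", "search"
    zscore: float     # anomaly score against the stream's own baseline
    threshold: float  # per-stream cutoff, assumed tuned on historical data

def fused_alert(signals, min_agreeing=2):
    """k-of-n voting: alert only if at least `min_agreeing` streams exceed their cutoffs."""
    exceeding = [s.name for s in signals if s.zscore >= s.threshold]
    return len(exceeding) >= min_agreeing, exceeding
```

Each stream (clinic visits, wastewater load, search volume) would be scored separately and the scores passed to `fused_alert`. Requiring two of three streams to agree trades some sensitivity for fewer false alarms driven by, for example, a media-fueled search spike.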

Establish Common Data Standards and Interagency Sharing Agreements to Cut Reporting Delays

Fragmented formats and one-off interfaces slow case reconciliation when minutes matter. Converging on established healthcare vocabularies and interoperable APIs enables real-time exchange, fewer manual fixes, and faster situational awareness across labs, hospitals, and public health agencies. A practical path centers on a common schema, consistent identifiers, and machine-readable governance rules that automate validation both at the point of capture and at ingestion.

- Transport and schema: HL7 FHIR/REST or secure messaging with versioned resources; JSON as the default payload.

- Code sets: LOINC for tests, SNOMED CT for clinical concepts, ICD-10 for diagnoses, UCUM for units.

- Identifiers: Patient linkage via privacy-preserving tokens, facility NPI/CLIA, device and lot numbers, standardized location (ISO country, FIPS/census tract).

- Metadata minimums: Collection and result timestamps, specimen type, method, confidence/quality flags, reporter role, and schema version.

- Quality gates: Automated conformance checks, referential integrity, and deduplication rules baked into pipelines.
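Two of the items above, privacy-preserving linkage tokens and quality gates, can be illustrated in plain Python. This is a sketch under stated assumptions: tokens are an HMAC-SHA256 of normalized name and birth date under a secret shared by the exchanging parties, records are FHIR Observation resources already parsed from JSON into dicts, `effectiveDateTime` stands in for the collection timestamp, and the checks cover only a handful of rules. It is not a substitute for a full FHIR validator, and the function names are hypothetical.

```python
import hashlib
import hmac

LOINC = "http://loinc.org"
UCUM = "http://unitsofmeasure.org"

def linkage_token(secret, given, family, birth_date):
    """Keyed hash (HMAC-SHA256) of normalized identifiers.

    Parties holding the same secret derive the same token for the same person
    without exchanging names or birth dates.
    """
    normalized = "|".join(s.strip().lower() for s in (given, family, birth_date))
    return hmac.new(secret, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

def conformance_errors(obs):
    """Minimal checks on a FHIR Observation-shaped dict; returns a list of problems."""
    errors = []
    if obs.get("resourceType") != "Observation":
        errors.append("resourceType must be Observation")
    if not obs.get("status"):
        errors.append("status is required")
    codings = obs.get("code", {}).get("coding", [])
    if not any(c.get("system") == LOINC and c.get("code") for c in codings):
        errors.append("code must include a LOINC coding")
    if not obs.get("effectiveDateTime"):
        errors.append("collection timestamp (effectiveDateTime) missing")
    qty = obs.get("valueQuantity")
    if qty is not None and qty.get("system") != UCUM:
        errors.append("valueQuantity must use UCUM units")
    return errors

def deduplicate(observations):
    """Keep the first record per (subject token, LOINC code, timestamp).

    Assumes every record has already passed conformance_errors().
    """
    seen, kept = set(), []
    for obs in observations:
        loinc = next(c["code"] for c in obs["code"]["coding"] if c.get("system") == LOINC)
        key = (obs["subject"]["identifier"]["value"], loinc, obs["effectiveDateTime"])
        if key not in seen:
            seen.add(key)
            kept.append(obs)
    return kept
```

Keyed hashing stops outsiders from recomputing tokens from public data, but exact-match tokens still miss records that differ by a typo or a name change.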

To move data at the speed of an outbreak, agencies also need clear legal and operational rails. Memoranda of understanding and data use agreements can codify purpose-limited sharing, role-based access, and reciprocity across jurisdictions, while zero-trust controls and audit trails provide accountability. Event-driven exchanges, such as secure publish/subscribe channels and webhooks, cut polling delays and help ensure high-fidelity updates reach the right teams first.

- Scope and lawful basis: Public health purposes, minimum necessary fields, retention windows, and permitted re-use.

- Security and privacy: Encryption in transit/at rest, access tiers, consent where required, breach notification timelines.

- Operational commitments: API SLAs, outage reporting, contact escalation, and change-management for schema versions.

- Governance: Joint data stewardship, dispute resolution, audit logging, and periodic review of data quality metrics.

- Continuity: Sandbox environments, conformance testing, and disaster recovery to maintain reporting during surges.
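The event-driven, role-based sharing described above can be modeled with a small in-process broker. It is a stand-in only: the class, topic names, and roles are hypothetical, delivery is synchronous, and a real deployment would use an authenticated message service with retries. It shows three properties the agreements call for: push delivery instead of polling, access limited by role per topic, and an audit entry for every subscription, denial, and delivery.

```python
from collections import defaultdict
from datetime import datetime, timezone

class SharingBroker:
    """Hypothetical in-process stand-in for a secure publish/subscribe channel."""

    def __init__(self, topic_roles):
        self.topic_roles = topic_roles        # topic -> set of roles the agreement permits
        self.subscribers = defaultdict(list)  # topic -> [(agency, role, callback)]
        self.audit_log = []

    def subscribe(self, topic, agency, role, callback):
        """Register a subscriber only if its role is permitted for the topic."""
        if role not in self.topic_roles.get(topic, set()):
            self._audit("denied_subscribe", topic, agency, role)
            return False
        self.subscribers[topic].append((agency, role, callback))
        self._audit("subscribed", topic, agency, role)
        return True

    def publish(self, topic, message):
        """Push the message to every permitted subscriber as soon as it arrives."""
        for agency, role, callback in self.subscribers[topic]:
            callback(message)
            self._audit("delivered", topic, agency, role)

    def _audit(self, action, topic, agency, role):
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action, "topic": topic, "agency": agency, "role": role,
        })
```

Keeping the permitted roles in a plain mapping mirrors how an agreement's access tiers might be expressed as machine-readable policy that both parties can review.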

Invest in Wastewater Surveillance, Local Analytics Talent, and Trigger-Based Dashboards for Rapid Response

Public health agencies are moving sewage metrics from pilot projects to core infrastructure, treating them as a leading indicator that complements clinical reporting. The shift hinges on two pillars: scalable collection and lab workflows, and local analytics talent embedded within health departments to interpret signals against community context. Analysts trained in epidemiology, geospatial methods, and environmental data science translate viral load curves into actionable insights, accounting for catchment boundaries, population shifts, industrial confounders, and seasonality. With standardized metadata and rapid turnaround from sample to result, wastewater trends flag emerging hotspots days before hospitalizations rise, enabling targeted outreach rather than blanket restrictions.

- Sampling and lab capacity: sentinel sites by sewershed, composite sampling, multiplex qPCR plus selective sequencing for variant tracking.

- Quality and comparability: method controls, cross-lab proficiency testing, and common data dictionaries to normalize across jurisdictions.

- Equity and siting: coverage for underserved neighborhoods, schools, shelters, and congregate settings to reduce blind spots.

- Workforce: funded analyst positions, fellowships with utility partners, and reproducible pipelines in open tools for continuity.

- Governance: privacy-preserving aggregation, clear data-sharing MOUs, and open APIs for researchers and local responders.

- Trigger-based dashboards: pre-registered thresholds (e.g., week-over-week viral load growth, sustained exceedance of baselines, new variant detection) that automatically notify response teams.
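The pre-registered triggers listed above can be expressed as a few explicit rules over weekly samples. The sketch assumes a common normalization, converting concentration to copies per person per day (concentration × daily flow ÷ population served), and uses hypothetical parameters: a 50% week-over-week growth band, two consecutive weeks above a supplied baseline, and any sequenced lineage not already in a known set.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    week: int
    copies_per_liter: float
    flow_liters_per_day: float
    population_served: int
    lineages: frozenset  # lineages detected by sequencing in this sample

def per_capita_load(s):
    """Copies per person per day: concentration x daily flow / population served."""
    return s.copies_per_liter * s.flow_liters_per_day / s.population_served

def evaluate_triggers(samples, baseline, growth_band=0.5, sustained_weeks=2,
                      known_lineages=frozenset()):
    """Return the names of triggers fired by the most recent weekly sample.

    baseline, growth_band, sustained_weeks, and known_lineages are hypothetical
    pre-registered parameters; sustained_weeks is assumed to be at least 1.
    """
    samples = sorted(samples, key=lambda s: s.week)
    loads = [per_capita_load(s) for s in samples]
    fired = []
    # Week-over-week growth relative to the previous sample.
    if len(loads) >= 2 and loads[-2] > 0:
        if loads[-1] / loads[-2] - 1 >= growth_band:
            fired.append("week_over_week_growth")
    # Sustained exceedance: every one of the last N weeks above baseline.
    recent = loads[-sustained_weeks:]
    if len(recent) == sustained_weeks and all(x > baseline for x in recent):
        fired.append("sustained_baseline_exceedance")
    # Variant detection: any lineage not seen before in this sewershed.
    if samples and samples[-1].lineages - known_lineages:
        fired.append("new_lineage")
    return fired
```

Writing the thresholds as named parameters keeps them easy to pre-register and publish, so the rule that fired an alert can be traced after the fact.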

Dashboards built on trigger thresholds convert complex trends into rapid decisions: when growth rates cross predefined bands or a new lineage appears, alerts route to schools, hospitals, and wastewater utilities with response playbooks attached. Integrating corroborating feeds (over-the-counter test sales, syndromic visits, air quality in high-risk facilities) reduces false positives and supports rapid responses such as pop-up testing, surge vaccination, ventilation checks, and targeted communications in affected ZIP codes. Performance is tracked with audit logs, time-to-action metrics, and after-action reviews, while public-facing views maintain trust through transparent methods and confidence intervals. The result is a faster, cheaper containment loop that keeps essential services open and focuses scarce resources where they prevent the most harm.
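Time-to-action, one of the performance metrics named above, can be computed directly from audit-log timestamps. This sketch assumes alerts and response actions are logged with a shared alert ID and ISO-8601 timestamps that all follow the same timezone convention; the function names and data shapes are hypothetical.

```python
from datetime import datetime
from statistics import median

def time_to_action_hours(alerts, actions):
    """Hours from each alert to the first logged action that references it.

    alerts:  {alert_id: ISO-8601 timestamp when the trigger fired}
    actions: [(alert_id, ISO-8601 timestamp of a response action), ...]
    Alerts with no recorded action are returned separately so after-action
    reviews can examine them instead of silently dropping them.
    """
    first_action = {}
    for alert_id, stamp in actions:
        when = datetime.fromisoformat(stamp)
        if alert_id not in first_action or when < first_action[alert_id]:
            first_action[alert_id] = when
    delays, unanswered = {}, []
    for alert_id, stamp in alerts.items():
        if alert_id in first_action:
            elapsed = first_action[alert_id] - datetime.fromisoformat(stamp)
            delays[alert_id] = elapsed.total_seconds() / 3600
        else:
            unanswered.append(alert_id)
    return delays, unanswered

def median_time_to_action(delays):
    """Median delay in hours, or None if no alert has been acted on yet."""
    return median(delays.values()) if delays else None
```

For an alert fired at 08:00 and first acted on at 11:00 the same day, the recorded delay is 3.0 hours; reporting the median rather than the mean keeps one slow response from dominating the metric.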

In Summary

As health agencies weave together electronic records, wastewater readings and mobility trends, data analytics is shifting from pilot projects to core public health infrastructure. The promise is faster detection, more precise interventions and better use of limited resources.

The hurdles are clear: standardizing inputs across jurisdictions, protecting privacy, sustaining funding and building a workforce that can interpret models and communicate uncertainty. New tools powered by artificial intelligence and real-time computing raise the stakes, making the next test an operational one: turning early warnings into timely decisions the public understands.

With pathogens evolving and climate and travel reshaping risk, data will remain central to disease control. The measure of success is simple: fewer cases and quicker containment. Reaching it, however, will depend on trust, governance and the capacity to act on what the numbers reveal.