Social media platforms are increasingly turning to artificial intelligence to police the exploding volume of video content, deploying machine-learning systems to flag violence, hate speech, misinformation, and copyright violations at scale. Advanced computer vision and audio models now scan uploads and live streams in real time, routing suspected violations to automated filters or human moderators within seconds.

The shift reflects both volume and urgency: short-form video has eclipsed text in many feeds, deepfakes and synthetic media are easier to produce, and regulators are demanding faster, more transparent enforcement under measures such as the European Union’s Digital Services Act. Platforms say AI improves speed and coverage across languages and formats, and can catch patterns human teams miss.

But the technology remains imperfect. Systems can misread context, satire, and cultural nuance, raising risks of over-removal and bias, while determined actors adapt to evade detection. Creators complain of inconsistent takedowns and slow appeals, and civil liberties groups warn of opaque decisions with limited recourse. As elections and major events test the integrity of online video, the industry is betting that smarter algorithms, paired with human review, can keep pace. Whether they can do so without chilling legitimate speech is the question now confronting companies and policymakers alike.

Table of Contents

- AI steps up in policing social media video as platforms struggle with volume and speed

- Bias blind spots and adversarial attacks expose limits, prompting calls for public benchmarks, independent audits, and clear metrics

- Action plan for platforms: pair multimodal detection with human review, add rapid appeals, publish error rates, and adopt on-device screening for privacy

- Future Outlook

AI steps up in policing social media video as platforms struggle with volume and speed

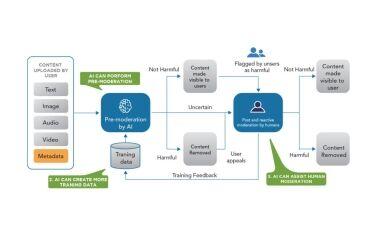

Confronted with surging upload volumes and real-time streams, major platforms are accelerating deployment of automated review that inspects frames, audio, and on-screen text pre- and post-publication, routing high-risk clips to limited human teams while throttling distribution of suspected violations. Vision models fused with speech-to-text and OCR flag violence, hate symbols, doxxing, and self-harm cues; perceptual hashing hunts re-uploads; and retrieval-augmented policy engines align decisions with evolving rulebooks. Despite faster takedowns and broader coverage, precision gaps persist around satire, newsworthy context, and activism, even as evaders tweak filters, crops, and synthetic audio to slip past detectors. Regulatory pressure, from the EU’s DSA to emerging national codes, now demands measurable speed, auditability, and appeal paths, pushing investment in transparency dashboards, crisis protocols, and clearer user notices.

- Multimodal detectors combine computer vision, ASR, and OCR to score risk across visuals, speech, and embedded text in near real time.

- Policy-aware LLMs translate nuanced rules into machine-readable checks, reducing inconsistent enforcement and improving explainability in notices.

- Live stream safeguards introduce automated delays, age gates, and interstitials while escalating likely violations to human moderators within seconds.

- Provenance signals such as C2PA metadata and watermarking help distinguish authentic footage from manipulated or synthetic clips at scale.

- Human-in-the-loop review focuses on borderline cases and appeals, with calibrated thresholds to curb both false positives and missed harms.

- Adversarial resilience uses continuous red-teaming and similarity hashing to counter evasion tactics like cropping, pitch-shifting, and filter abuse.
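To make the re-upload hunting described above concrete, here is a minimal sketch of one simple perceptual hash, an "average hash" compared by Hamming distance. It is an illustration under stated assumptions, not any platform's actual method: the function names, the tiny 4x4 grayscale frames, and the `max_distance` cutoff are all hypothetical, and a production system would first downscale real frames and would need sturdier techniques to survive heavy cropping.

```python
from typing import List

# A frame is a small grayscale grid: rows of pixel values in 0-255.
# Assumption: real frames have already been downscaled to this size.
Frame = List[List[int]]


def average_hash(frame: Frame) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the frame mean."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def is_probable_reupload(a: Frame, b: Frame, max_distance: int = 2) -> bool:
    """Treat two frames as near-duplicates if their hashes differ in few bits.

    max_distance is a hypothetical cutoff chosen for this 16-bit example.
    """
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


original = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A uniformly brightened copy, as a simple filter might produce.
brightened = [[p + 20 for p in row] for row in original]
# A visually different frame: bright top half, dark bottom half.
unrelated = [[200] * 4, [200] * 4, [10] * 4, [10] * 4]

print(is_probable_reupload(original, brightened))  # True
print(is_probable_reupload(original, unrelated))   # False
```

Because each bit records only whether a pixel sits above the frame's own mean, a uniform brightness shift leaves the hash unchanged. Edits that move content around the frame change many bits at once, which is one reason the evasion tactics listed above remain a problem for simple hashes.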

Bias blind spots and adversarial attacks expose limits, prompting calls for public benchmarks, independent audits, and clear metrics

As platforms expand automated policing of videos, researchers and watchdogs report that skewed training data and opaque thresholds are producing systematic blind spots, while low-cost adversarial edits (noisy audio, fast crops, text overlays, and style transfers) consistently bypass detectors. The pattern has intensified calls for standardized scrutiny that can be compared across vendors and over time, in place of marketing claims or cherry-picked demos.

- Public benchmarks: Open, regularly refreshed suites spanning languages, dialects, lighting/skin tones, and video genres, with adversarial stress tests and sequestered test sets to curb overfitting.

- Independent audits: Third-party access to models, logs, and sampling methods; publication of audit notes and remediation timelines; structured red-teaming beyond vendor-run exercises.

- Clear metrics: Class- and subgroup-level precision/recall, calibration error, attack success rates, time-to-correct after appeals, and measurable trade-offs between safety and over-removal.

- Accountability reporting: Routine release of takedown reason codes, escalation pathways, and the share of moderator overrides to surface systematic bias or brittleness.

- Drift and robustness monitoring: Continuous evaluation under distribution shift, with public alerts when model performance degrades on emerging formats or manipulated content.
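Two of the metrics named above, subgroup-level precision/recall and attack success rate, can be computed from labeled samples in a few lines. The sketch below assumes a hypothetical record layout of `(subgroup, model_flagged, truly_violating)` and defines attack success as the share of clips detected before an adversarial edit that go undetected afterward; real audits may define both differently.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical record layout: (subgroup, model_flagged, truly_violating)
Record = Tuple[str, bool, bool]


def subgroup_precision_recall(
    records: Iterable[Record],
) -> Dict[str, Dict[str, Optional[float]]]:
    """Compute precision and recall separately for each subgroup."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for group, flagged, violating in records:
        c = counts[group]  # register the group even if it only has true negatives
        if flagged and violating:
            c["tp"] += 1
        elif flagged:
            c["fp"] += 1
        elif violating:
            c["fn"] += 1
    report = {}
    for group, c in counts.items():
        flagged_total = c["tp"] + c["fp"]
        violating_total = c["tp"] + c["fn"]
        report[group] = {
            # None marks an undefined ratio (no flags, or no true violations).
            "precision": c["tp"] / flagged_total if flagged_total else None,
            "recall": c["tp"] / violating_total if violating_total else None,
        }
    return report


def attack_success_rate(
    detected_before: List[bool], detected_after: List[bool]
) -> Optional[float]:
    """Share of originally detected clips that evade detection after an edit."""
    after_for_detected = [a for b, a in zip(detected_before, detected_after) if b]
    if not after_for_detected:
        return None
    return sum(1 for a in after_for_detected if not a) / len(after_for_detected)


sample = [
    ("en", True, True), ("en", True, True), ("en", True, False), ("en", False, True),
    ("sw", True, True), ("sw", False, True), ("sw", False, True), ("sw", False, False),
]
print(subgroup_precision_recall(sample))
print(attack_success_rate([True, True, True, False], [True, False, False, False]))
```

In this made-up sample the detector is precise in both groups but recalls only one in three violations in the second, the kind of gap that an aggregate figure would hide.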

Action plan for platforms: pair multimodal detection with human review, add rapid appeals, publish error rates, and adopt on-device screening for privacy

As short-form video dominates feeds, platforms are shifting from opaque, AI-only takedowns to verifiable, layered workflows that balance speed with accountability and privacy, with the following operational blueprint gaining traction across the industry.

- Blend multimodal detection with human review: Vision, audio, OCR, and metadata models triage content; expert moderators adjudicate edge cases, satire, and cultural nuance; risk-tiered thresholds slow virality for uncertain items pending review.

- Guarantee rapid appeals: In-line, one-tap appeals with clear SLAs (e.g., 24 hours for most cases, 2 hours for live incidents); restore reach and analytics when decisions are reversed; give case-level rationales and notify impacted uploaders and reporters.

- Publish error rates: Monthly dashboards disclosing false positives/negatives and precision/recall by language, region, and content type; include confusion matrices, drift indicators, and reviewer agreement metrics; back findings with independent audits and red-team tests.

- Adopt on-device screening for privacy: Optional, privacy-preserving pre-upload checks that flag risks without sending raw media to servers; leverage secure enclaves, federated learning, and differential privacy; use cryptographic transparency logs to prove policy conformance.

- Build accountability guardrails: Standardize taxonomies; maintain appeal transcripts for oversight; offer researcher access through privacy-safe APIs; prioritize child safety, crisis misinformation, and coordinated abuse while protecting journalism and human-rights documentation.
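The risk-tiered routing and appeal deadlines in this blueprint can be sketched as a small decision function. This is a rough illustration, not a reference design: the two score thresholds and all names are hypothetical, while the 24-hour and 2-hour windows are taken from the example SLAs above.

```python
from datetime import datetime, timedelta
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    LIMIT_AND_REVIEW = "limit_reach_pending_human_review"
    REMOVE_AND_NOTIFY = "remove_and_notify"


# Hypothetical thresholds; a real system would calibrate these per policy area.
REVIEW_THRESHOLD = 0.40
REMOVE_THRESHOLD = 0.95


def route(risk_score: float) -> Action:
    """Map a model risk score in [0, 1] to a tiered moderation action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= REMOVE_THRESHOLD:
        return Action.REMOVE_AND_NOTIFY
    if risk_score >= REVIEW_THRESHOLD:
        # Uncertain items keep limited reach until a moderator decides.
        return Action.LIMIT_AND_REVIEW
    return Action.ALLOW


def appeal_deadline(filed_at: datetime, is_live_incident: bool) -> datetime:
    """Return when an appeal must be resolved under the example SLAs."""
    hours = 2 if is_live_incident else 24
    return filed_at + timedelta(hours=hours)


print(route(0.60))  # Action.LIMIT_AND_REVIEW
print(appeal_deadline(datetime(2024, 1, 1, 12, 0), is_live_incident=True))
```

Keeping the middle tier explicit is the design point: content the model is unsure about is slowed, not removed, so a wrong call costs some reach for a few hours instead of a takedown.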

Future Outlook

As short-form video and live streams continue to dominate social platforms, the industry’s reliance on AI to triage and police content is set to deepen. Multimodal systems promise faster detection of harm at scale, yet the hardest calls (context, satire, newsworthiness, and local norms) remain stubbornly human. With regulatory regimes tightening and elections on the horizon, platforms face pressure to prove not only speed but due process: clearer policies, measurable accuracy, meaningful appeals, and independent audits.

The path forward points to a hybrid model. AI will shoulder more of the first pass (flagging deepfakes, coordinating watermark and provenance checks, and prioritizing urgent risks) while specialized human teams handle edge cases and accountability. Success will hinge on transparency about training data and error rates, broader support for under-represented languages and dialects, and researcher access that can validate claims without compromising privacy. For companies, the calculus is shifting from mere takedown volume to demonstrable reductions in real-world harm and moderator burnout.

The tools are improving, and so are adversaries. Whether AI ultimately strengthens trust in social video will depend less on promises of automation than on sustained investment in safeguards, cross-industry standards, and the willingness to show the public how these systems work, and when they don’t.