Artificial intelligence is moving from the back room to the front line of satellite imaging. Once confined to cleaning up pictures after they reached Earth, AI now helps decide what satellites capture, which pixels get prioritized for downlink, and how quickly analysts can act on what they see. The shift is reshaping a market that spans disaster response, climate monitoring, agriculture, insurance and defense, as constellations multiply and revisit times shrink from days to minutes.

The drivers are practical as much as technical. A surge in high-resolution optical, radar and hyperspectral sensors is producing more data than ground stations can handle. Edge AI running in orbit eases the downlink bottleneck by compressing, triaging and labeling imagery in real time; on the ground, models detect change, track ships and aircraft, fill cloud gaps and boost resolution, cutting the time from collection to alert from hours to near-real time. Operators say the payoff is faster decisions and lower costs across missions, from wildfire mapping to sanctions enforcement.

With the gains come questions. Accuracy under adverse conditions, model bias, adversarial spoofing and opaque training data raise risks for sectors that increasingly treat satellite analytics as evidence. Regulators and customers are pressing for transparency, auditability and clearer rules on dual-use applications. How the industry answers may determine who leads the next phase of Earth observation.

Table of Contents

- AI moves from image capture to decision support in satellite operations

- Put inference in orbit to cut latency and bandwidth use and to speed disaster response

- Demand transparent data lineage, model cards and independent audits in procurement

- Fuse radar, optical and thermal data with physics-informed models for resilient mapping

- Concluding Remarks

AI moves from image capture to decision support in satellite operations

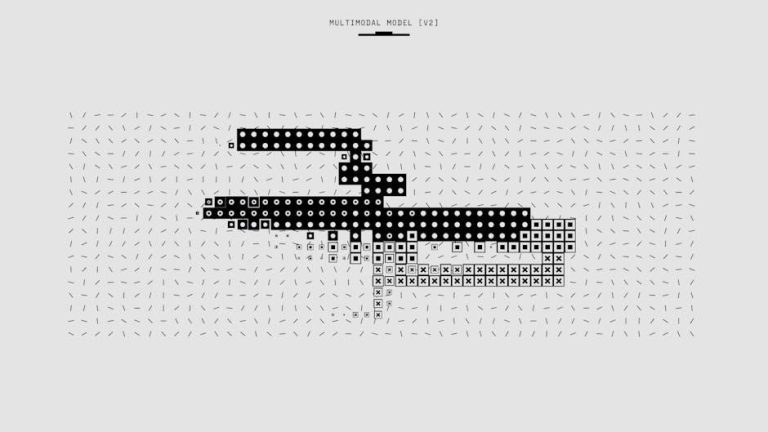

Satellite programs are rapidly integrating onboard inference and ground-based model pipelines to transform raw pixels into operationally relevant cues. Instead of sending everything to Earth, spacecraft now triage scenes in orbit, flagging anomalies, prioritizing targets, and shaping downlink decisions. The result is lower latency from collection to insight, reduced bandwidth pressure, and more agile tasking across constellations. Early deployments highlight a shift from after-the-fact analysis to continuous situational awareness, where models collaborate across sensors (optical, SAR, hyperspectral) to deliver fused, mission-ready context.

- Onboard change detection to spotlight emerging activity and suppress static backgrounds

- Quality screening for blur, stray light, and off-nadir distortion before downlink

- Cloud, shadow, and haze filtering to conserve capacity for high-utility scenes

- Tip-and-cue across satellites for rapid follow-up collections

- Region-of-interest encoding that compresses what matters and discards noise
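
As a rough illustration of the cloud-screening step in the list above, the sketch below keeps or drops a scene based on the cloudy fraction of a binary mask. The `Scene` type, the mask format and the 50% threshold are assumptions made for the example, not details of any operator's flight software.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    scene_id: str
    cloud_mask: list  # rows of 0/1 flags; 1 = cloudy pixel (hypothetical onboard mask)


def cloud_fraction(mask):
    """Share of pixels flagged cloudy; an empty mask is treated as fully cloudy."""
    total = sum(len(row) for row in mask)
    cloudy = sum(sum(row) for row in mask)
    return cloudy / total if total else 1.0


def triage(scenes, max_cloud=0.5):
    """Split scene IDs into those worth downlinking and those to drop."""
    keep, drop = [], []
    for scene in scenes:
        target = keep if cloud_fraction(scene.cloud_mask) <= max_cloud else drop
        target.append(scene.scene_id)
    return keep, drop


scenes = [
    Scene("A", [[0, 0], [0, 1]]),  # one of four pixels cloudy
    Scene("B", [[1, 1], [1, 0]]),  # three of four pixels cloudy
]
keep, drop = triage(scenes)
print(keep, drop)
```

A real pipeline would derive the mask from a trained classifier and would likely weigh cloud cover against target priority rather than apply a single cutoff.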

Beyond imagery, AI is informing flight dynamics, network scheduling, and operations-center workflows with probabilistic recommendations and transparent audit trails. Models now propose maneuver windows, optimize ground-station contact plans, and generate value-of-information estimates that align collections with mission priorities. Governance is maturing in parallel: operators are introducing human-in-the-loop guardrails, model provenance, and scenario stress-testing to maintain reliability under orbital clutter, contested RF environments, and shifting weather regimes.

- Conjunction risk scoring with ranked maneuver options and fuel trade-offs

- Dynamic retask recommendations that weigh weather, angle, and downstream demand

- Downlink queue optimization by mission value, latency targets, and user SLAs

- Sensor-mode selection (e.g., SAR vs. optical) based on predicted scene utility

- Explainable recommendations with confidence bands and traceable model inputs
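
Downlink queue optimization of the kind listed above can be pictured as a priority queue. The following is a minimal sketch, assuming a made-up urgency score (mission value divided by minutes to deadline) and a greedy fill of one ground-station contact; the item names, numbers and scoring rule are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class DownlinkItem:
    priority: float                        # heapq pops the smallest value first
    name: str = field(compare=False)
    size_mb: float = field(compare=False)


def score(mission_value, minutes_to_deadline):
    """Hypothetical urgency score: higher value and nearer deadline rank higher."""
    return mission_value / max(minutes_to_deadline, 1.0)


def plan_pass(items, capacity_mb):
    """Greedily send the most urgent items that still fit in the contact's capacity."""
    heap = [DownlinkItem(-score(value, deadline), name, size)
            for name, value, deadline, size in items]
    heapq.heapify(heap)
    sent, used = [], 0.0
    while heap:
        item = heapq.heappop(heap)
        if used + item.size_mb <= capacity_mb:
            sent.append(item.name)
            used += item.size_mb
    return sent


# (name, mission value, minutes to deadline, size in MB); illustrative values only
queue = [
    ("wildfire_alert", 10.0, 5.0, 2.0),
    ("routine_mosaic", 3.0, 600.0, 800.0),
    ("ship_detection", 6.0, 30.0, 50.0),
]
print(plan_pass(queue, capacity_mb=100.0))  # ['wildfire_alert', 'ship_detection']
```

The greedy fill is chosen for readability; an operational scheduler would also have to respect service-level agreements, link budgets and multiple contacts.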

Put inference in orbit to cut latency and bandwidth use and to speed disaster response

Satellite operators are moving analytics from ground to orbit, running onboard inference that converts imagery into compact events and vectors before any downlink. By transmitting decisions rather than pixels, missions are reporting materially lower bandwidth consumption, reduced latency for time‑critical alerts, and higher system resilience when ground passes are congested or interrupted. Hardware accelerators now baked into smallsats and high‑throughput platforms support vision models for change detection, object tracking, and segmentation, enabling near-real-time tasking loops and machine‑to‑machine cueing across constellations.

- Data minimization: Insights, masks, and geotagged features replace multi‑gigabyte frames.

- Prioritized downlink: Confidence‑scored events automatically preempt routine traffic.

- Autonomous tip‑and‑cue: One satellite flags anomalies; another retasks for higher resolution.

- Resilience: Store‑and‑forward alerts survive link outages and contested spectrum.

Emergency managers are already testing these pipelines for wildfires, floods, and earthquakes, where seconds matter and fiber backhaul may be damaged. Trials indicate faster situational awareness and leaner ground workloads: triaged heat signatures become dispatchable polygons; SAR classifiers flag landslides through clouds; maritime models mark oil-slick boundaries for rapid containment. Operators are pairing edge analytics with governance guardrails to ensure reliability at scale.

- Field impact: Minutes‑scale anomaly alerts, simplified overlays for incident command, fewer false positives via multi‑sensor fusion.

- Model operations: Signed over‑the‑air updates, calibration against ground truth, and auditable inference logs.

- Interoperability: Standards‑based vectors (GeoJSON, STAC) stream directly into GIS and CAD systems.

- Safeguards: On‑orbit filtering supports privacy‑by‑design and reduces transmission of sensitive scenes.
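
To make "transmitting decisions rather than pixels" concrete, the sketch below wraps a detected footprint as a GeoJSON Feature and measures the serialized size. The coordinates, label, confidence value, timestamp and property names are placeholders invented for the example; only the Feature and FeatureCollection structure follows the GeoJSON format.

```python
import json


def detection_to_feature(ring, label, confidence, observed_at):
    """Wrap a detected footprint as a GeoJSON Feature.

    ring is a list of [lon, lat] positions; it is closed here if the
    caller did not repeat the first position at the end.
    """
    if ring[0] != ring[-1]:
        ring = ring + [ring[0]]
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {
            "label": label,              # hypothetical property names
            "confidence": confidence,
            "observed_at": observed_at,
        },
    }


feature = detection_to_feature(
    [[-120.50, 38.10], [-120.40, 38.10], [-120.40, 38.20], [-120.50, 38.20]],
    label="active_fire",
    confidence=0.91,
    observed_at="2024-01-01T00:00:00Z",  # placeholder timestamp
)
payload = json.dumps({"type": "FeatureCollection", "features": [feature]})
print(len(payload.encode("utf-8")), "bytes")
```

A payload like this is a few hundred bytes, which is the point of the approach: the polygon and its attributes travel instead of the frame they were derived from.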

Demand transparent data lineage, model cards and independent audits in procurement

Government buyers and major geospatial firms are tightening procurement standards for AI that interprets orbital data, pushing vendors to disclose how training inputs are sourced, cleaned, and governed across satellite imaging pipelines. Contracts increasingly require model cards that detail data lineage and operational limits so decision-makers in disaster response, agriculture, and defense can verify claims before deploying automated mapping or detection at scale.

- Provenance and licensing: source sensors, collection dates, geo-coverage, resolution, and usage rights for each dataset and any synthetic data.

- Preprocessing and augmentation: cloud masking, atmospheric correction, SAR speckle handling, tiling, class balance, and de-noising steps.

- Lineage graph: transformations, versioned datasets, and model checkpoints linked to training runs and retraining triggers.

- Known failure modes: sun glint, snow/ice, night-time thermal gaps, coastal ambiguity, and low-shot classes such as rare infrastructure.

- Security posture: supply-chain integrity, data residency, cross-border transfers, and watermarking or tamper detection for imagery.

In parallel, buyers are commissioning independent audits to verify performance claims and surface risk, bias, and compliance issues, an approach that mirrors financial assurance more than tech marketing. Audit scopes now extend beyond headline accuracy to stress tests on contested terrain, export-control checks, and incident reporting, with remedies and termination clauses for vendors that fail ongoing monitoring.

- Robustness: evaluation across seasons, polar regions, sensor mix (EO/SAR), varying off-nadir angles, and cloud-heavy scenes.

- Fairness and impact: error rates by geography and land class; assessments of downstream effects on communities and critical infrastructure.

- Privacy and legal: consent and licensing, civil-military dual-use review, embargoed areas, and export-control adherence.

- Operational metrics: latency, drift monitoring, false alarm costs, and service-level triggers for retraining.

- Governance: red-teaming results, model change logs, carbon accounting for training, and 72-hour disclosure windows for material failures.
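
One way a buyer might operationalize the disclosure items above is an automated completeness check on a vendor's model card. The sketch below assumes a card delivered as a nested dictionary; the section and field names are hypothetical and do not follow any published model-card schema.

```python
# Hypothetical required sections, loosely mirroring the disclosure items in this section.
REQUIRED = {
    "provenance": ["source_sensors", "collection_dates", "usage_rights"],
    "preprocessing": ["cloud_masking", "atmospheric_correction"],
    "lineage": ["dataset_version", "model_checkpoint"],
    "known_failure_modes": [],  # only presence of a non-empty entry is checked
}


def missing_fields(card):
    """Return dotted paths of required entries that are absent or empty."""
    gaps = []
    for section, keys in REQUIRED.items():
        value = card.get(section)
        if not value:
            gaps.append(section)
            continue
        for key in keys:
            if not value.get(key):
                gaps.append(f"{section}.{key}")
    return gaps


# Illustrative card with two deliberate gaps
card = {
    "provenance": {
        "source_sensors": ["optical", "SAR"],
        "collection_dates": "example range",
        "usage_rights": "",
    },
    "preprocessing": {
        "cloud_masking": "example method",
        "atmospheric_correction": "example method",
    },
    "lineage": {"dataset_version": "v1"},
    "known_failure_modes": ["sun glint", "snow/ice"],
}
print(missing_fields(card))  # ['provenance.usage_rights', 'lineage.model_checkpoint']
```

A check like this only confirms that fields are filled in; whether the contents are accurate is what the independent audits are for.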

Fuse radar, optical and thermal data with physics-informed models for resilient mapping

Satellite programs are moving from single-sensor pipelines to integrated stacks where AI aligns synthetic aperture radar, multispectral imagery, and longwave infrared (thermal) signals under a shared physical scaffold. By embedding radiative transfer, sensor geometry, and surface energy balance constraints into learning, these systems suppress scene ambiguity from clouds, smoke, or nightfall and deliver consistent, gap-tolerant maps. The result is analysis-ready layers that reduce artifacts, track rapid change, and preserve provenance from raw measurements to derived products.

- All-weather continuity: SAR penetrates clouds while optical and thermal refine material properties and temperature gradients.

- Physics-regularized fusion: Models honor conservation laws and account for instrument noise, limiting overfitting and false positives.

- Cross-sensor co-registration: Sub-pixel alignment pairs backscatter, reflectance, and emissivity for stable time series.

- Uncertainty at the pixel level: Per-pixel confidence flags guide triage in disaster and infrastructure monitoring.

- Operational transparency: Audit trails link calibrations, atmospheric corrections, and fusion weights to each release.
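
The per-pixel confidence idea above can be illustrated with a much simpler stand-in than a physics-informed model: inverse-variance weighting of two sensors' estimates of the same quantity. The sketch assumes each sensor supplies a value and a variance per pixel, with `(None, None)` where a sensor has no usable observation (for example, optical under cloud); the numbers and the variance threshold are invented.

```python
def fuse_pixel(estimates):
    """Combine (value, variance) pairs for one pixel using inverse-variance weights.

    Returns (fused_value, fused_variance); (None, inf) if no sensor is usable.
    """
    usable = [(v, var) for v, var in estimates if v is not None and var > 0]
    if not usable:
        return None, float("inf")
    weights = [1.0 / var for _, var in usable]
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, usable)) / total
    return fused, 1.0 / total


def fuse_map(sensor_a, sensor_b, max_variance=0.03):
    """Fuse two flattened rows of pixels and flag those above a variance threshold."""
    out = []
    for a, b in zip(sensor_a, sensor_b):
        value, var = fuse_pixel([a, b])
        out.append({"value": value, "variance": var, "low_confidence": var > max_variance})
    return out


sar = [(0.8, 0.04), (0.2, 0.04)]          # illustrative per-pixel estimates
optical = [(0.6, 0.04), (None, None)]     # second pixel hidden by cloud
for pixel in fuse_map(sar, optical):
    print(pixel)
```

Where both sensors contribute, the fused variance drops below either input; where only SAR is available, the pixel keeps the SAR variance and is flagged, which is the kind of signal that can guide triage.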

Applied at scale, this approach strengthens situational awareness across flooding, landslides, wildfire recovery, urban heat, and crop stress: domains where temporal reliability and physical interpretability matter. Agencies and enterprises report faster tasking-to-insight cycles, smoother handoffs into GIS, and models that transfer across regions and seasons with fewer manual tweaks, as edge-to-cloud workflows stream fused layers into dashboards and automated alerts.

Concluding Remarks

As launch costs fall and sensors diversify, AI is moving upstream in the imaging chain, from tip-and-cue tasking to on‑orbit triage. Gains in cadence and coverage are reshaping expectations across government and industry. But the same tools raise questions about provenance, bias, and dual‑use risk, forcing operators and regulators to revisit standards for transparency, auditing, and export controls.

The next phase will test whether edge processing, multimodal fusion across optical, SAR and hyperspectral data, and increasingly large models can deliver trusted, timely insights at scale. With climate shocks, conflict zones and supply chains all in view, the stakes are immediate. What emerges in the coming years will determine not only who leads the sector, but how much the public trusts what satellites report about the world below. The trajectory is clear: AI will not just analyze satellite images; it will help decide which ones to capture, and when.