Artificial intelligence is stepping out of the chat window and into the interface itself. A new wave of voice-first, vision-capable systems is moving onto phones, PCs and wearables, promising computers that see what users see, hear what they say and act across apps without a click. Tech giants and startups alike are racing to embed generative models deep into operating systems and everyday tools, betting that hands-free, context-aware assistance will redefine how people search, work and navigate the world.

The shift could make computing more natural and more accessible, collapsing menus and keywords into conversation and gesture. It also raises high-stakes questions about privacy, reliability and control as always-on agents learn from sensitive personal data and automate tasks at scale. With regulators drafting new rules and companies pushing rapid deployments, the next phase of human-computer interaction is arriving fast, and with it the biggest redesign of the user interface in decades.

Table of Contents

- AI Agents Move to the Front End as Interfaces Shift from Clicks to Conversations

- Multimodal Interaction Blends Voice, Vision, and Gesture to Reduce Friction and Expand Access

- Trust Becomes the New UX with Privacy by Design, Transparent Reasoning, and On-Device Processing

- Action Plan for Teams: Prioritize Explainability, Choose Task-Specific Models, Pilot Edge Inference, and Test Accessibility

- The Way Forward

AI Agents Move to the Front End as Interfaces Shift from Clicks to Conversations

Product teams are retooling interfaces around dialogue rather than taps, embedding assistants directly into the primary canvas of apps. Major platforms are rolling out SDKs that let agents observe page context, invoke tools, and coordinate tasks across services, while UX patterns add chat panes, voice capture, and context chips to core workflows. Early enterprise pilots target support, search, and configuration (areas where multimodal inputs and context-aware actions reduce friction), yet they also surface questions about transparency, reliability, and provenance. Policy scrutiny is rising as on-device signals mingle with cloud models, pushing vendors to document data flows, latency trade-offs, and safety controls.

- Interface shifts: chat-first panels, voice controls, and inline summaries replace deep navigation.

- Stack changes: client-side tool calling, server-orchestrated functions, and session memory grounded in company data.

- Runtime choices: hybrid inference (edge + cloud) to balance privacy, cost, and response speed.

- Accountability: visible citations, action previews, and user-managed logs to track what agents did and why.
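The hybrid edge-plus-cloud choice in the list above can be pictured as a small routing rule. The following is a minimal sketch, not any vendor's implementation: the `Request` fields, the `EDGE_TOKEN_LIMIT` threshold, and the `choose_runtime` function are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    text: str
    contains_sensitive_data: bool  # assumed to be flagged by an on-device check
    estimated_tokens: int          # rough size of the prompt


# Hypothetical threshold; a real value would come from measurement.
EDGE_TOKEN_LIMIT = 512


def choose_runtime(req: Request, network_available: bool) -> str:
    """Return "edge" or "cloud" for a single request."""
    # Privacy first: sensitive inputs never leave the device.
    if req.contains_sensitive_data:
        return "edge"
    # No connectivity: the device is the only option.
    if not network_available:
        return "edge"
    # Small prompts stay local to save cost and round-trip time.
    if req.estimated_tokens <= EDGE_TOKEN_LIMIT:
        return "edge"
    # Everything else goes to the larger cloud model.
    return "cloud"
```

The ordering of the checks encodes the priority: privacy overrides cost, and cost overrides raw model capability. A production router would likely also weigh battery state and measured latency.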

As assistants take the lead in user journeys, KPIs shift from click-through to turn quality, containment, and handoff success. Design now borrows from operations playbooks: deterministic fallbacks, consent prompts for data access, and post-action receipts. To keep interactions trustworthy, teams are hardening tool schemas, tightening retrieval sources, and adopting continuous evaluation. Cost and accessibility pressures are moving summarization and intent parsing closer to the device, while regulated sectors demand explainability and auditable trails. The competitive edge increasingly comes from orchestration discipline and governance as much as model choice.

- Instrument rigorously: track latency per turn, error modes, and fallback rates; sample and review transcripts.

- Constrain behavior: strict tool contracts, verified sources, and layered guardrails to prevent overreach.

- Design for handoffs: one-click exits to traditional UI when confidence drops, with clear state transfer.

- Protect privacy: minimize collection, favor on-device processing where feasible, and expose granular controls.

- Document impact: action previews, citations, and audit logs that meet compliance and user expectations.
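Several of these practices (strict tool contracts, handoffs when confidence drops, action previews, and audit logs) can be combined in one gatekeeping step. The sketch below is illustrative only: the tool names, the `CONFIDENCE_FLOOR` value, and the `AuditLog` class are assumptions, not part of any named SDK.

```python
import json
from dataclasses import dataclass, field

# Hypothetical contracts: tool name -> required argument names and types.
TOOL_CONTRACTS = {
    "reset_password": {"user_id": str},
    "update_plan": {"user_id": str, "plan": str},
}
CONFIDENCE_FLOOR = 0.7  # assumed threshold for handing off to the classic UI


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, event: str, detail: dict) -> None:
        self.entries.append({"event": event, **detail})


def handle_tool_call(name: str, args: dict, confidence: float, log: AuditLog) -> str:
    """Validate a model-proposed tool call and decide what happens next."""
    contract = TOOL_CONTRACTS.get(name)
    if contract is None:
        log.record("rejected", {"tool": name, "reason": "unknown tool"})
        return "rejected"
    # Strict contract: exactly the declared arguments, with the declared types.
    wrong_keys = set(args) != set(contract)
    if wrong_keys or any(not isinstance(args[k], t) for k, t in contract.items()):
        log.record("rejected", {"tool": name, "reason": "contract violation"})
        return "rejected"
    if confidence < CONFIDENCE_FLOOR:
        # Hand off to the traditional UI, carrying the state gathered so far.
        log.record("handoff", {"tool": name, "state": json.dumps(args, sort_keys=True)})
        return "handoff"
    # Nothing executes yet: the user sees a preview and confirms first.
    log.record("preview", {"tool": name, "args": args})
    return "preview"
```

Every branch writes to the log, so the record of what the agent attempted, and why it was stopped or allowed, exists even for rejected calls.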

Multimodal Interaction Blends Voice, Vision, and Gesture to Reduce Friction and Expand Access

Voice, computer vision, and gestures are converging into a single, fluid interface that lets systems perceive context and intent at once. Early pilots show faster task completion, fewer taps, and broader accessibility as assistants understand what the camera sees, what the user says, and how they move. In sectors from retail to healthcare and automotive, this reduces input bottlenecks, supports hands-busy scenarios, and opens services to people who struggle with keyboards or small screens.

- Voice: hands-free control in noisy or constrained environments, with natural confirmations and error recovery.

- Vision: object and scene awareness to ground commands (“this” or “that”), streamline scanning, and verify steps.

- Gesture: subtle, privacy-preserving cues (nods, points, and swipes) when speaking is impractical.

- Accessibility: support for low literacy, motor variability, and multilingual use; pathways for sign-language recognition and real-time captioning.

Behind the scenes, the stack is shifting to on-device multimodal inference for lower latency and stronger privacy, while edge/cloud orchestration handles complex scenes. Vendors are standardizing sensor fusion and intent arbitration to resolve conflicts between inputs, and design teams are adopting new UX metrics that weigh time-to-action, confidence, and error recoverability across modalities. Regulators and accessibility bodies are also pressing for transparent data handling and inclusive defaults.

- Privacy by default: selective capture, ephemeral buffers, and clear recording indicators.

- Robustness: fallback paths when audio drops, hands are occupied, or lighting degrades.

- Discoverability: lightweight prompts that teach available voice phrases, camera cues, and micro-gestures.

- Interoperability: aligning with web accessibility roles and emerging multimodal APIs to ensure consistent behavior across devices.
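Intent arbitration, mentioned above as the way conflicts between inputs get resolved, can be as simple as pooling confidence across modalities. This is one possible scheme among many, sketched under assumptions: the `Signal` type, the `MIN_CONFIDENCE` threshold, and the summing rule are hypothetical and not drawn from any standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Signal:
    modality: str      # "voice", "vision", or "gesture"
    intent: str        # e.g. "select_item"
    confidence: float  # 0.0 to 1.0, as reported by the recognizer


MIN_CONFIDENCE = 0.6  # assumed floor below which the system asks instead of acting


def arbitrate(signals: list[Signal]) -> Optional[str]:
    """Pick one intent by summing confidence per intent across modalities."""
    scores: dict[str, float] = {}
    for s in signals:
        scores[s.intent] = scores.get(s.intent, 0.0) + s.confidence
    if not scores:
        return None
    best_intent, best_score = max(scores.items(), key=lambda kv: kv[1])
    # If nothing is convincing, return None so the UI can ask the user.
    return best_intent if best_score >= MIN_CONFIDENCE else None
```

With this rule, a pointing gesture and a camera detection that agree can outvote a single, slightly more confident voice reading, while a lone weak signal triggers a clarifying prompt rather than an action.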

Trust Becomes the New UX with Privacy by Design, Transparent Reasoning, and On-Device Processing

Major platforms are retooling interfaces around privacy-by-design, making trust as tangible as speed or aesthetics. With dedicated silicon enabling on-device processing, sensitive data stays local, while transparent reasoning turns black-box outputs into verifiable claims. The result is a shift from opaque personalization to accountable assistance, where disclosures, proof points, and performance coexist without compromise.

- Data stays local: inference, redaction, and summarization run on the device; only aggregates move.

- Explainability at a glance: output rationales, source attributions, and risk flags presented in-line.

- Consent that counts: granular controls with live previews and revocable scopes.

- Secure compute paths: enclaves, differential privacy, and federated updates as default posture.
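Granular, revocable consent can be modeled as a small ledger of scopes that every data access must pass through. The sketch below is a hypothetical illustration: the `ConsentLedger` class and the scope names are invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentLedger:
    """Tracks which data scopes a user has granted; every scope is revocable."""
    granted: set = field(default_factory=set)

    def grant(self, scope: str) -> None:
        self.granted.add(scope)

    def revoke(self, scope: str) -> None:
        self.granted.discard(scope)  # revoking an absent scope is a no-op

    def allows(self, required: set) -> bool:
        """True only if every required scope is currently granted."""
        return required <= self.granted

    def preview(self, required: set) -> list:
        """Scopes the user would still need to grant, for a preview prompt."""
        return sorted(required - self.granted)


# Usage: the assistant wants camera frames and contacts for one task.
ledger = ConsentLedger()
ledger.grant("camera.frames")
needed = {"camera.frames", "contacts.read"}
missing = ledger.preview(needed)  # ["contacts.read"]
```

Because `allows` is checked at the moment of access rather than once at install time, a revocation takes effect on the very next request.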

Product roadmaps are aligning with measurable trust KPIs: opt-in rates, zero privacy incidents, and user-verified explanations. Compliance becomes a feature, not a footnote, as teams instrument audit-ready logs, signed model artifacts, and policy-aware prompts to meet regional rules without degrading UX. Analysts expect UI patterns that surface provenance and risk alongside answers, normalizing a “show your work” standard for every AI touchpoint.

- Operational guardrails: purpose limitation, data minimization, and deletion SLAs tracked in dashboards.

- Verifiable provenance: content credentials and model signatures exposed to users and auditors.

- Latency without leakage: edge-first design paired with selective, encrypted fallback to cloud.

- Trust telemetry: user overrides, confidence bands, and red-team findings fed back into release gates.

Action Plan for Teams: Prioritize Explainability, Choose Task-Specific Models, Pilot Edge Inference, and Test Accessibility

Teams are moving from aspiration to execution, building playbooks that make model decisions legible, match architectures to narrowly defined jobs, bring inference closer to users, and verify inclusive use by design. To operationalize this shift, leaders are adding governance artifacts (model cards tied to business KPIs, prompt/change logs, and reproducible evaluation harnesses) alongside procurement checklists for domain-tuned models, privacy-by-default data flows, and energy and latency budgets that reflect real-world constraints.

- Explainability first: surface rationale snippets in the UI, attach evidence traces to critical outputs, log counterfactual tests, and track an “explanation satisfaction score” from user feedback.

- Task-fit over one-size-fits-all: favor small, fine-tuned or distilled models for well-scoped jobs; run side-by-side benchmarks on domain-specific sets for accuracy, cost, and p95 latency.

- Edge inference pilots: A/B on-device vs. cloud for privacy, resilience, and speed; monitor thermal and battery impact; set OTA update and rollback procedures with signed models.

- Accessibility as a blocking criterion: audit against WCAG 2.2; validate keyboard-only flows, screen-reader semantics, captions, haptics, and accent/dialect robustness in voice features.

Execution metrics tighten the loop: rationale coverage (% of critical decisions with explanations), expected calibration error (ECE) and calibration drift, on-device p95 latency and offline uptime, screen-reader task success rates, and failure taxonomy closure per sprint. Cross-functional squads spanning product, legal, security, and accessibility run privacy impact assessments, threat models (prompt injection, model exfiltration), and red-teaming before expansion gates. A kill-switch, incident playbooks, and clear data-retention limits keep pilots safe while informing scale-up, positioning teams to meet regulatory expectations and sustain user trust at launch.
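Three of these metrics are simple enough to compute directly from logs. The sketch below assumes a hypothetical log format (dicts with `critical` and `explanation` keys) and uses the standard binned definition of expected calibration error, ECE = Σ (|B|/n) · |accuracy(B) − mean confidence(B)| over confidence bins B, plus a nearest-rank 95th percentile. The function names are illustrative.

```python
import math


def rationale_coverage(decisions: list[dict]) -> float:
    """Share of critical decisions that carry a non-empty explanation."""
    critical = [d for d in decisions if d["critical"]]
    if not critical:
        return 1.0  # nothing critical to explain
    return sum(1 for d in critical if d.get("explanation")) / len(critical)


def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """Bin-size-weighted mean of |accuracy - mean confidence| per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 joins the last bin
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / total) * abs(accuracy - avg_conf)
    return ece


def p95(latencies_ms: list) -> float:
    """Nearest-rank 95th percentile of a non-empty list of latencies."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]
```

A model that reports 90% confidence but is right 75% of the time scores an ECE of about 0.15 under this definition; tracking that number release over release is one way to watch for calibration drift.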

The Way Forward

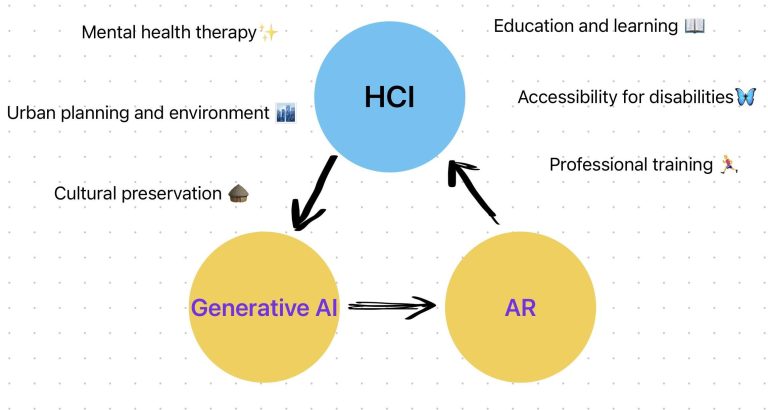

As AI moves from a backend capability to the front line of interaction, the stakes extend beyond novelty. Interfaces are shifting from clicks and taps to conversations, context, and intent, promising productivity gains and broader accessibility while raising unresolved questions about privacy, bias, accountability, and who controls the new interaction layer. With platforms embedding multimodal assistants and enterprises piloting agentic workflows, the near term will be defined as much by standards, safeguards, and UX choices as by model size.

Regulators, developers, and device makers now face a common test: can AI-driven interfaces be made reliable, transparent, and interoperable enough to earn trust at scale? The answer will shape whether the next generation of computing feels ambient and empowering, or intrusive and brittle. For users and businesses alike, the outcome is no longer about features but about governance and design. As the line between tool and collaborator blurs, the future of human-computer interaction will be decided at the intersection of policy, engineering, and everyday use.