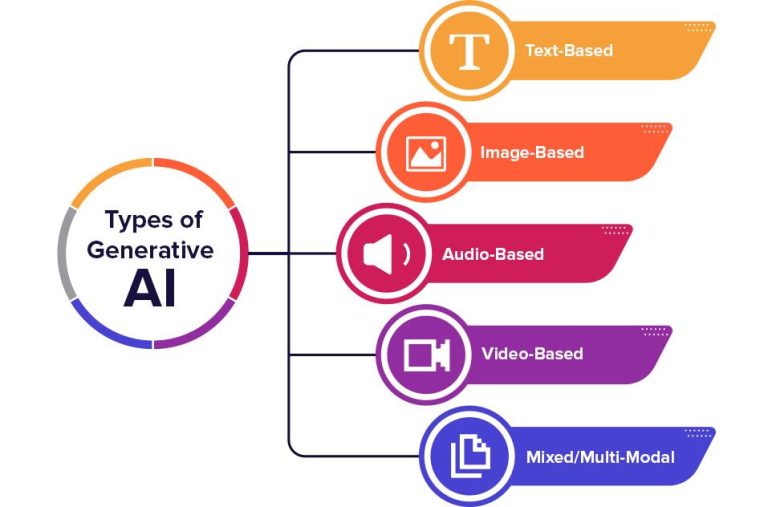

A fresh wave of generative AI models is rippling through the tech industry and into office workflows, redrawing product road maps, budgets and job descriptions. From flagship releases at major platforms to fast‑moving open‑source alternatives, systems that can compose text, code, images and audio are moving from glossy demos to day‑to‑day deployment.

The acceleration is reshaping competitive dynamics and the cost structure of computing, driving investment in chips and data centers while triggering legal battles over training data and intellectual property. Employers are rolling out AI “copilots” across software development, customer support and marketing, reporting efficiency gains alongside persistent concerns about accuracy, bias and security.

As the tools become cheaper and more capable, the question for companies and policymakers is shifting from whether to adopt AI to how to govern it: balancing innovation with oversight, and augmentation with the risk of displacement.

Table of Contents

- Generative models move from lab to workload engine across cloud and enterprise

- Jobs change task by task as roles blend, prompting new upskilling playbooks

- Infrastructure pivots to specialized chips, vector databases, and retrieval to cut cost and latency

- What leaders should do now: invest in data quality, fine-tuning, guardrails, and frontline training

- To Conclude

Generative models move from lab to workload engine across cloud and enterprise

Once experimental, now essential: foundation and domain-tuned models are being wired directly into production systems, stitched into CI/CD, and exposed as policy-governed services across hybrid clouds. Cloud providers are rolling out optimized runtimes, vector-native storage, and caching layers, while enterprises place inference close to data for latency, cost control, and compliance. The architecture playbook is shifting from ad hoc demos to resilient pipelines with RAG, guardrails, and observability, enabling multi-model routing across GPUs, CPUs, and NPUs based on price/performance and data sensitivity.

- From prototypes to SLAs: uptime, latency, and rollback baked into model endpoints

- From prompts to pipelines: orchestration, vector search, and deterministic tools unify flows

- From single-model to portfolio: task-specialized and distilled models reduce cost without sacrificing quality

- From cloud-only to hybrid: on-prem inference for sovereignty, edge for immediacy
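The multi-model routing described above can be reduced to a simple policy: filter candidate models by a quality bar and by whether the data may leave the private environment, then pick the cheapest model that survives. This is a minimal sketch under stated assumptions; the model names, prices, and quality scores are hypothetical, and a production router would also weigh latency, current load, and hardware availability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                 # hypothetical endpoint identifier
    usd_per_1m_tokens: float  # assumed blended price per million tokens
    quality_score: float      # assumed offline eval score in [0, 1]
    on_prem: bool             # True if the endpoint runs inside the private environment


def route(options, min_quality, sensitive):
    """Pick the cheapest model that meets the quality bar; sensitive data stays on-prem."""
    eligible = [
        m for m in options
        if m.quality_score >= min_quality and (m.on_prem or not sensitive)
    ]
    if not eligible:
        raise LookupError("no model satisfies the quality and data-residency constraints")
    return min(eligible, key=lambda m: m.usd_per_1m_tokens)


# Hypothetical portfolio: a large cloud model, a distilled cloud model, an on-prem model.
catalog = [
    ModelOption("large-cloud", 10.0, 0.92, on_prem=False),
    ModelOption("distilled-cloud", 0.5, 0.81, on_prem=False),
    ModelOption("mid-onprem", 2.0, 0.86, on_prem=True),
]
```

With these numbers, `route(catalog, 0.8, sensitive=False)` picks the distilled model, while the same request flagged as sensitive falls back to the on-prem endpoint. Raising an error when nothing qualifies, rather than silently choosing a non-compliant model, is a deliberate choice.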

Operations lead the narrative: security teams enforce data boundaries, legal codifies usage policies, and FinOps tracks token spend alongside GPU utilization. Early wins are consolidating around customer support, document automation, and developer productivity, but the competitive frontier is governance and measurement: continuous evals, bias audits, and safety testing that keep systems reliable at scale. With standards maturing around model cards, content provenance, and enterprise policy kits, the center of gravity is shifting to repeatable delivery of business outcomes.

- Production patterns: retrieval pipelines, structured output, and tool calling for deterministic actions

- Risk controls: layered filters, red-teaming, and audit trails integrated into workflows

- Cost discipline: caching, batching, quantization, and workload-aware routing

- Talent shift: platform teams standardize SDKs, eval harnesses, and golden datasets for reuse
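The "structured output and tool calling" pattern works by having the model emit a machine-readable request that ordinary code validates before any deterministic action runs. The sketch below assumes the model returns a JSON object with hypothetical `tool` and `arguments` fields; the `get_order_status` tool is a stand-in, not a real API.

```python
import json


def get_order_status(order_id: str) -> str:
    """Hypothetical deterministic tool; a real system would query an order database."""
    return f"order {order_id}: shipped"


# Registry of allowed tools and the argument types each one requires.
TOOLS = {
    "get_order_status": {"fn": get_order_status, "required": {"order_id": str}},
}


def dispatch(model_output: str) -> str:
    """Validate a model's JSON tool call and run the matching function, or fail loudly."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("tool")
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown or missing tool: {name!r}")
    spec = TOOLS[name]
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    # Every required argument must be present with the expected type.
    for key, typ in spec["required"].items():
        if not isinstance(args.get(key), typ):
            raise ValueError(f"argument {key!r} missing or not {typ.__name__}")
    # Reject anything the tool does not declare, so the model cannot smuggle in extra parameters.
    extra = set(args) - set(spec["required"])
    if extra:
        raise ValueError(f"unexpected arguments: {sorted(extra)}")
    return spec["fn"](**args)
```

For example, `dispatch('{"tool": "get_order_status", "arguments": {"order_id": "A17"}}')` runs the lookup, while malformed JSON, unknown tools, or wrongly typed arguments raise `ValueError` before anything executes.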

Jobs change task by task as roles blend, prompting new upskilling playbooks

As generative systems spread across toolchains, employers are breaking jobs into measurable tasks and rebundling them around outcomes. With task-level automation standardizing drafting, summarization, QA, and outreach, teams are hiring for role convergence: hybrids who can orchestrate models, data, and domain expertise. Procurement is shifting from headcount to capability, and compensation is beginning to track impact per task rather than legacy titles.

- Data-literate marketers who prompt, evaluate, and fine-tune generators against brand guardrails.

- AI-fluent product managers translating user intents into retrieval strategies and evaluation suites.

- Compliance engineers embedding auditability, red-teaming, and policy-as-code into ML pipelines.

- Support analysts curating feedback loops and knowledge graphs to lift first-contact resolution.

Learning teams are replacing static curricula with dynamic upskilling playbooks tethered to live workflows. Companies are instrumenting tasks with telemetry, mapping gaps with skills graphs, and delivering micro-challenges inside the tools employees already use. Governance is embedded: evaluation harnesses, content filters, and human-over-the-loop checkpoints are becoming standard operating controls.

- Skills heatmaps tied to task inventories to target training spend.

- In-product coaching with templated prompts, critique rubrics, and safe defaults.

- Outcome metrics (time-to-draft, review cycles, incident rates) to validate ROI.

- Internal gig markets routing work by verified skills, not org charts.
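At its simplest, a skills heatmap tied to a task inventory weights each skill gap by how much work depends on that skill. The sketch below assumes a hypothetical inventory with weekly hours, a required proficiency level per task, and a team's assessed level per skill; real programs would source these figures from workflow telemetry and assessments.

```python
# Hypothetical inputs: weekly hours per task, the skill each task needs,
# the proficiency level it requires (1-5), and the team's assessed level per skill.
task_inventory = {
    "draft campaign copy": {"hours": 12, "skill": "prompting", "required": 3},
    "review model output": {"hours": 8, "skill": "evaluation", "required": 4},
    "update knowledge base": {"hours": 5, "skill": "retrieval", "required": 2},
}
team_proficiency = {"prompting": 2, "evaluation": 2, "retrieval": 3}


def skill_gaps(tasks, proficiency):
    """Weight each skill's shortfall by the hours of work that depend on it."""
    gaps = {}
    for task in tasks.values():
        shortfall = max(0, task["required"] - proficiency.get(task["skill"], 0))
        gaps[task["skill"]] = gaps.get(task["skill"], 0) + shortfall * task["hours"]
    # Largest weighted gap first: one simple ordering for where training spend goes.
    return sorted(gaps.items(), key=lambda kv: kv[1], reverse=True)
```

Here evaluation (a two-level gap across 8 hours) outranks prompting (a one-level gap across 12 hours), and retrieval drops to zero because the team already exceeds the requirement.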

Infrastructure pivots to specialized chips, vector databases, and retrieval to cut cost and latency

As model footprints swell and demand spikes, cloud and enterprise stacks are shifting toward domain-specific accelerators and smarter serving paths to keep economics in check. Providers are leaning on lower precision (8- and 4-bit), sparsity, operator fusion, and high-bandwidth interconnects (NVLink, PCIe Gen5, CXL) to compress cost per token while stabilizing p95 latency. Power and supply constraints are pushing inference closer to the data, at the edge and in private regions, backed by SLA-aware schedulers that balance throughput and tail performance across heterogeneous fleets of TPUs, MI300s, and inference-first silicon like Inferentia and NPUs.

- Training vs. inference split: Heterogeneous fleets pair high-memory trainers with low-cost decoders for steady-state serving.

- Memory-first designs: SRAM/HBM capacity is often the bottleneck; CPU offload and KV-cache paging reduce GPU minutes.

- Aggressive quantization: 8-, 4-, and even 2-bit pipelines, paired with quantization-aware training (QAT), aim to preserve quality while cutting memory footprint and bandwidth.

- Throughput-aware scheduling: Dynamic batching raises throughput and speculative decoding cuts per-token latency, helping smooth tail latency under bursty load.

- Transparent economics: Teams track $/1M tokens, tokens/watt, and p99 latency as first-class SLOs.
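To make the quantization bullet concrete, here is symmetric per-tensor int8 quantization with round-to-nearest, one of the simplest post-training schemes. It is a minimal sketch rather than any vendor's pipeline: it does not show 4- or 2-bit formats, per-channel scales, or quantization-aware training, and the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four for float32, and round-to-nearest keeps the per-weight error at or below `scale / 2`. Small values such as `0.003` collapse to zero, which is the kind of loss that finer-grained scales and QAT are meant to limit.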

On the data plane, organizations are standardizing on vector-native storage and retrieval to ground generations in proprietary knowledge while shrinking context lengths. Approximate nearest neighbor algorithms (HNSW, IVF-PQ) and hybrid dense-sparse ranking prune prompt tokens, while semantic caches eliminate duplicate calls. Freshness and governance are built in: near-real-time indexing, lineage, and redaction satisfy compliance, and observability traces the end-to-end path from document chunk to final answer to counter drift and hallucinations.

- RAG by default: Domain docs, features, and telemetry are chunked, embedded, and retrieved to narrow prompts.

- Hybrid retrieval: Dense vectors plus BM25/lexical signals lift recall and factuality over single-mode search.

- Cost controls: Context compression, rerankers, and cache hits can cut spend and p95 latency by double-digit percentages.

- Operational rigor: CI for indexes, embedding drift detection, and shadow tests protect quality at scale.

- Privacy-aware pipelines: PII filters, row-level ACLs, and tenant isolation keep regulated data out of prompts.
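One common way to combine dense and lexical results for hybrid retrieval is reciprocal rank fusion (RRF), which merges ranked lists without having to calibrate their raw scores against each other. The article does not say which fusion method any particular stack uses; RRF is shown here as an illustrative choice, with `k=60` as a frequently used default and made-up document IDs.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


dense_hits = ["doc_b", "doc_a", "doc_d"]    # e.g., from an ANN vector index
lexical_hits = ["doc_a", "doc_c", "doc_b"]  # e.g., from BM25
fused = reciprocal_rank_fusion([dense_hits, lexical_hits])
```

`doc_a` comes out on top because both retrievers rank it highly, even though neither list alone places it first in both. Documents found by only one retriever still survive, which is how hybrid retrieval lifts recall.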

What leaders should do now: invest in data quality, fine-tuning, guardrails, and frontline training

With generative systems moving from pilots to production, executives are reallocating budgets toward durable capabilities that make outputs reliable, compliant, and useful at the edge of operations. The near-term focus is shifting from headline models to the plumbing that sustains them (governed datasets, repeatable evaluation, and enforceable controls) so that customer-facing teams can deploy AI without amplifying errors or risk.

- Data quality: consolidate sources under clear ownership, implement schema standards and lineage, run continuous validation, flag synthetic data, de-bias sensitive attributes, and protect PII end‑to‑end.

- Model refinement: tune with curated, permissioned corpora; use retrieval augmentation for freshness; adopt scenario-based evals; and track metrics beyond accuracy, such as hallucination rate, safety violations, latency, and cost per 1K tokens.

- Guardrails: apply policy-tuned prompts, allow/deny lists, safety classifiers, rate limiting, jailbreak detection, red-team exercises, content watermarking, and immutable audit logs.

- Frontline enablement: deliver role-based training, workflow playbooks, approved disclosure language, escalation paths, and feedback loops that route corrections back into data pipelines and model updates.
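Two of the guardrails listed above, deny lists and PII protection, can be applied as a screening step before a prompt ever reaches a model. The sketch below is illustrative only: the regular expressions cover just email addresses and US Social Security number formats, and the deny-list phrase is a hypothetical example, so it is nowhere near a complete PII or jailbreak filter.

```python
import re

# Hypothetical policy: illustrative patterns only, not a complete PII or safety filter.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
DENY_TERMS = {"ignore previous instructions"}


def screen_prompt(text: str) -> tuple[bool, str]:
    """Return (allowed, sanitized_text): block deny-listed phrases, redact PII otherwise."""
    lowered = text.lower()
    if any(term in lowered for term in DENY_TERMS):
        return False, ""  # blocked prompts are never forwarded
    sanitized = EMAIL.sub("[EMAIL]", text)
    sanitized = US_SSN.sub("[SSN]", sanitized)
    return True, sanitized
```

`screen_prompt("Contact jane.doe@example.com, SSN 123-45-6789")` returns the prompt with both identifiers replaced by placeholders. In practice, a step like this would sit alongside classifiers, rate limits, and audit logging rather than replace them.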

Boards are asking for evidence that these controls translate to business value. Leaders are formalizing AI governance councils, embedding risk reviews in procurement, and tying funding to measured outcomes-reduction in rework, faster case resolution, higher CSAT, and fewer compliance findings. Clear KPIs, staged rollouts (from sandbox to canary to general availability), and transparent incident reporting are emerging as the operational backbone that turns experimentation into accountable, repeatable performance.
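A staged rollout from sandbox to canary to general availability can be gated by comparing a candidate's KPIs against the current baseline before each promotion. The metric names, the 10% latency budget, and the rule that any failing check sends the candidate back to the sandbox are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

STAGES = ["sandbox", "canary", "general_availability"]


@dataclass
class StageMetrics:
    resolution_rate: float  # share of cases resolved, 0..1
    incident_rate: float    # safety/compliance incidents per 1,000 requests
    p95_latency_ms: float


def next_stage(current, baseline, candidate, max_latency_regression=0.10):
    """Promote one stage only if quality and incidents match the baseline and latency stays in budget."""
    healthy = (
        candidate.resolution_rate >= baseline.resolution_rate
        and candidate.incident_rate <= baseline.incident_rate
        and candidate.p95_latency_ms <= baseline.p95_latency_ms * (1 + max_latency_regression)
    )
    if not healthy:
        return "sandbox"  # roll back for rework
    idx = STAGES.index(current)
    return STAGES[min(idx + 1, len(STAGES) - 1)]  # GA is the final stage
```

Requiring every check to pass, rather than averaging metrics into one score, keeps a latency or safety regression from being masked by a gain in resolution rate.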

To Conclude

For now, the acceleration shows little sign of slowing. Tech giants and startups alike are racing to ship larger models and cheaper, task‑specific tools, even as regulators weigh new guardrails and enterprises grapple with integration costs, data risks, and a widening skills gap. Compute supply, copyright liability, and model reliability remain choke points that could determine who turns early momentum into durable advantage.

What comes next will hinge on execution more than ambition. If pilots translate into measurable productivity and safer deployments, generative systems could become standard infrastructure across offices, studios, and codebases. If not, a reset may follow the current surge. Either way, the decisions that developers, customers, and policymakers make in the coming quarters will shape how much of today’s promise becomes tomorrow’s routine.